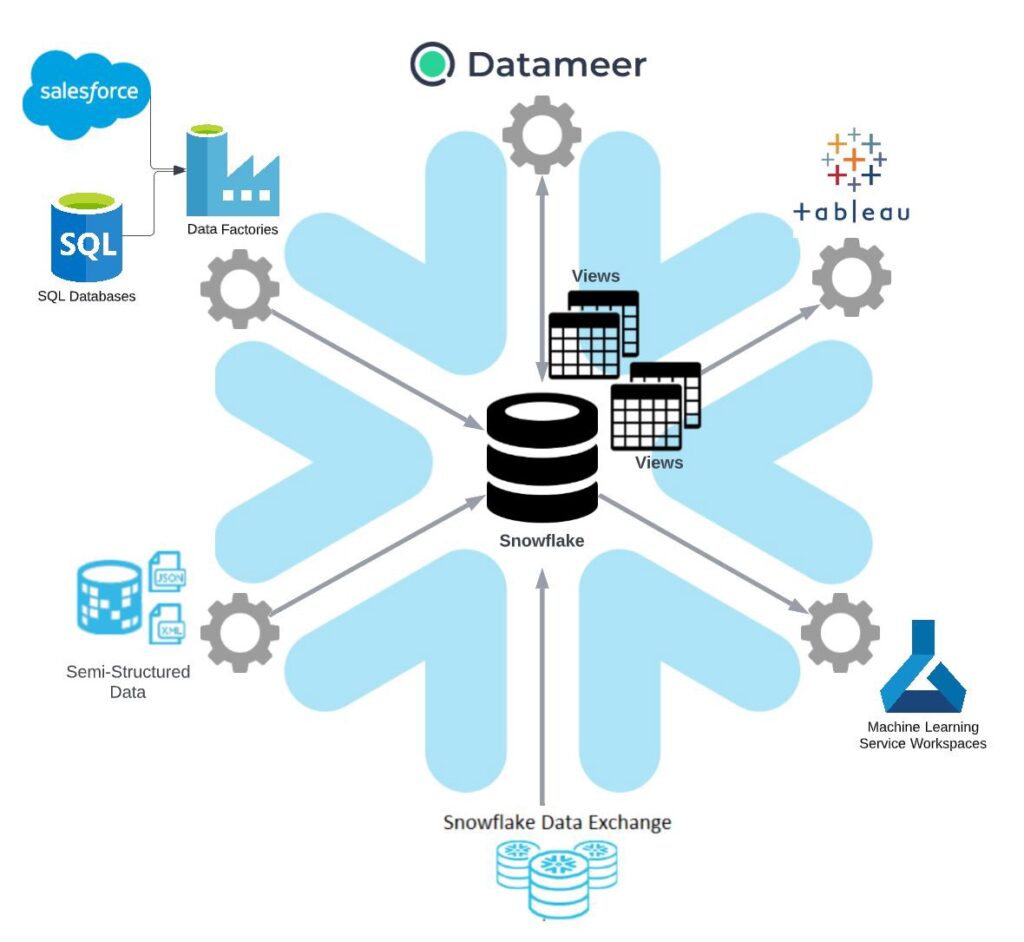

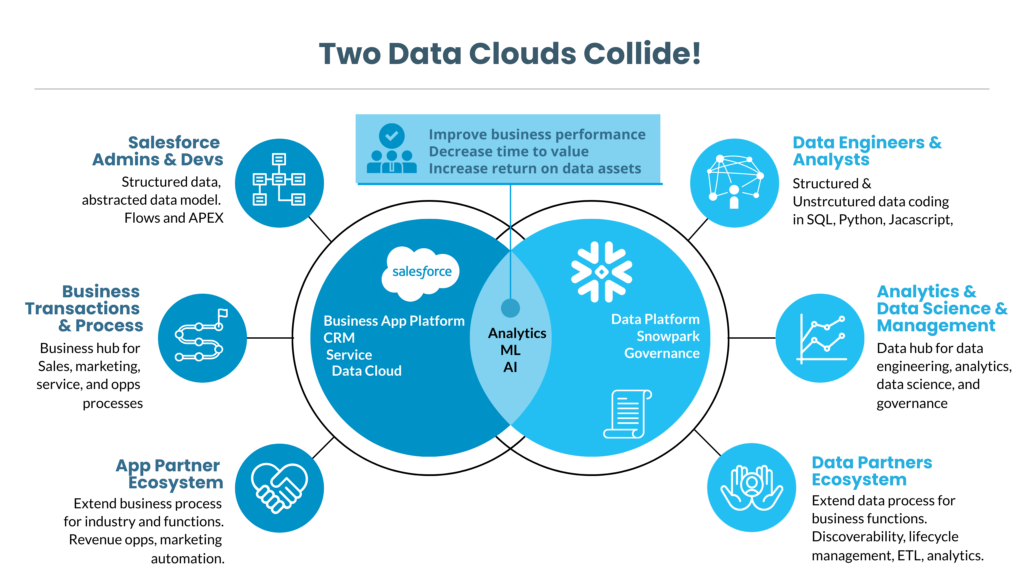

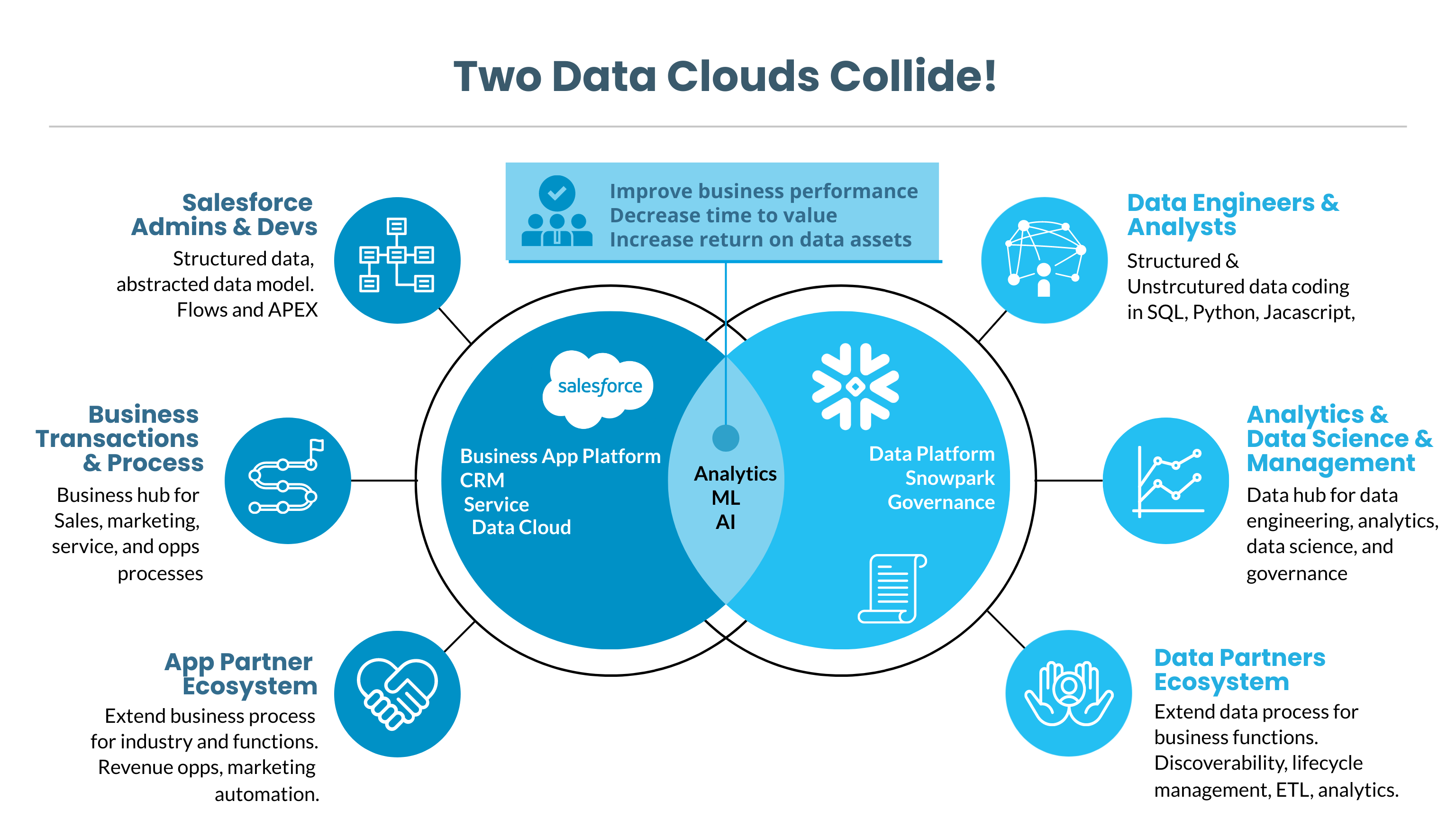

Salesforce and Snowflake are two powerful platforms for optimizing business processes and data analytics, each with its own ecosystem of developers, stakeholders, and users. The Salesforce Snowflake Integration is the link that connects these two cloud platforms.

Native Salesforce Snowflake Integration: A Milestone in Native Data Sharing

Earlier this week, Salesforce and Snowflake announced the general availability of native Salesforce data sharing for Snowflake, commonly referred to as “BYOL” (Bring Your Own Lake). This is a significant advancement, especially for Snowflake users familiar with the benefits of zero-copy sharing, a core Snowflake feature. With this integration, you no longer need layers of additional software, services, and complex processes to bridge the two platforms. This is where the Salesforce Snowflake Connector comes into play, simplifying data access and queries between Salesforce and Snowflake.

Skill Enhancement through Certification Paths

Salesforce Data Cloud serves as a data hub orchestrating a wide range of business activities, be it CRM, marketing, or any web/mobile activity. To build skills around it, Salesforce recently launched its Certified-Data-Cloud-Consultant learning path. This will help Salesforce organizations readily find skilled professionals adept in Salesforce Snowflake Integration.

Salesforce Runs on Snowflake: Following the Leader

In a revelation that should add credibility and assurance to the Salesforce Snowflake Integration, Salesforce’s internal data and analytics have migrated to run on Snowflake. This shows that Salesforce is not just advocating for the technology but using it itself, setting the stage for rapid advancements in Salesforce and Snowflake connectivity.

Transforming AI/ML Workloads

The Salesforce and Snowflake partnership holds tremendous promise for accelerating the time-to-value from your Salesforce data assets. From curating data to deploying ML models, the integration, facilitated by the Salesforce Snowflake Connector, will enable enterprises to use their data in novel ways, including advanced AI features. Many first-party and third-party solutions are available to support your model deployment efforts.

Need Help Navigating these Waters?

We have been integrating analytics apps with Salesforce and Snowflake for years. We recently wrote the Salesforce data synchronization to Snowflake Guide and can’t wait to extend this into Data Cloud. We have an incredible partner network that can help you implement any Salesforce or Snowflake Cloud components (CDP, Marketing Cloud, Tableau).