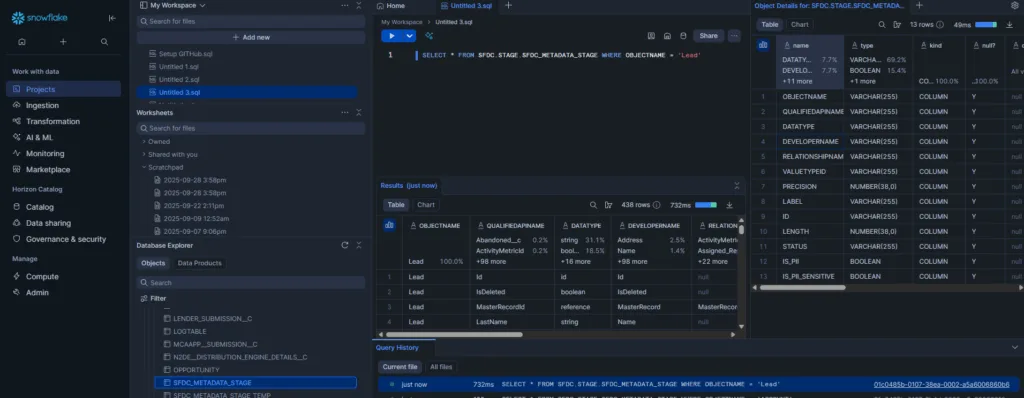

For the last 5 years, I have enjoyed deploying Snowflake worksheets to data workers who wanted quick, secure access to curated data assets. Recently, I was sent scrambling to find alternatives to Snowflake Workspaces for data workers who are not engineers by trade.

Worksheets were a quick and easy way to access data, administer Snowflake, and share SQL. Recently, Snowflake rolled out its next-generation Snowsight experience, and the default SQL authoring experience shifted from a minimalist worksheet editor to Workspaces, an IDE (integrated development environment) style experience.

Why are our users looking for an alternative to Snowflake Workspaces?

Customers immediately asked me for an alternative to Snowflake Workspaces for three reasons:

- Confusing and overwhelming user experience – My users describe their experience with words like “hate” and “complex.” After a few weeks, some have adapted, but I have seen usage plummet.

- Sharing – Snowflake worksheets had a native sharing function that let users start from run-only templates. Overnight, users’ worksheets became fragmented and were no longer shared.

- Errors and settings – Many worksheets that used to run stopped working when the worksheet’s database or schema was deselected.

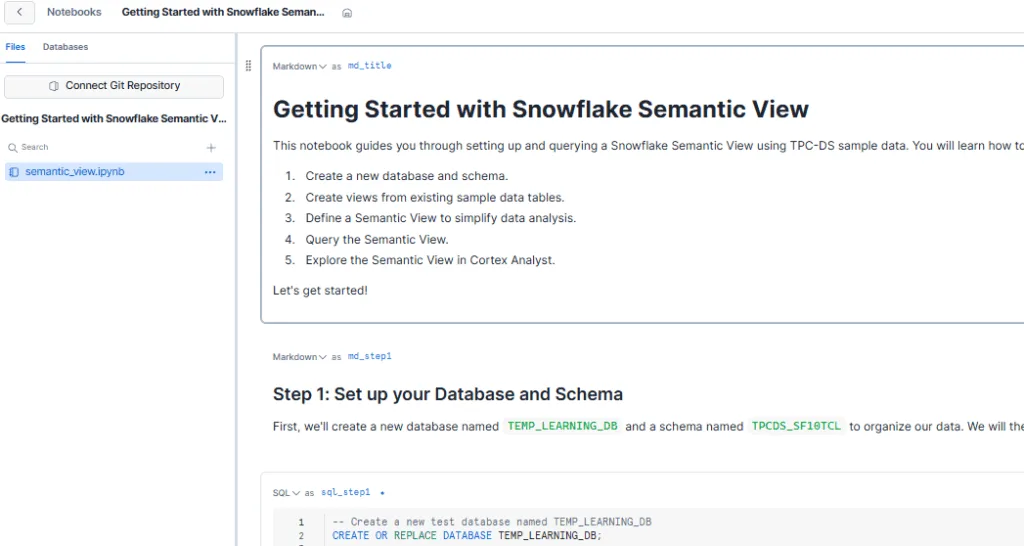

Shifting Data Workers to Notebooks

The easiest path for data workers is to shift to Notebooks, which provide a clean, minimalist experience with a linear, guided path through data and analysis. I personally love working in notebooks. Source control with GitHub makes notebooks a viable way to control and share work, but it requires managing access control across both tools.

As of November 2025, Notebooks, like Workspaces, have no Snowflake-managed “sharing” function.

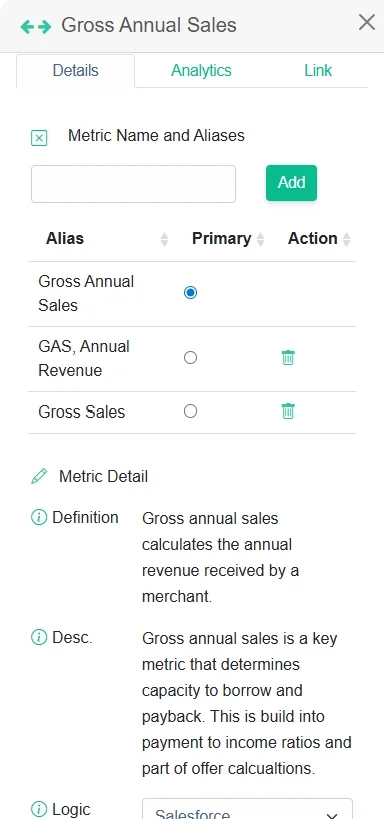

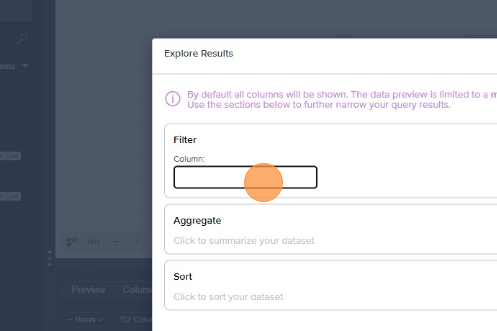

Marketplace: Streamlit App

I searched Snowflake Marketplace for a solution and came up short, so we built our own: DataTools Pro Explorer. We went the low-code route and created a point-and-click exploration tool that lets users quickly access data, refine it to the right grain and cut, save the resulting SQL, and then modify it as needed. We kept it very simple and released early. The feedback has been amazing, and we continue to improve and refine DataTools Pro Explorer every two weeks.

View and install DataTools Pro Explorer in Snowflake Marketplace (it’s free!), and view the documentation.

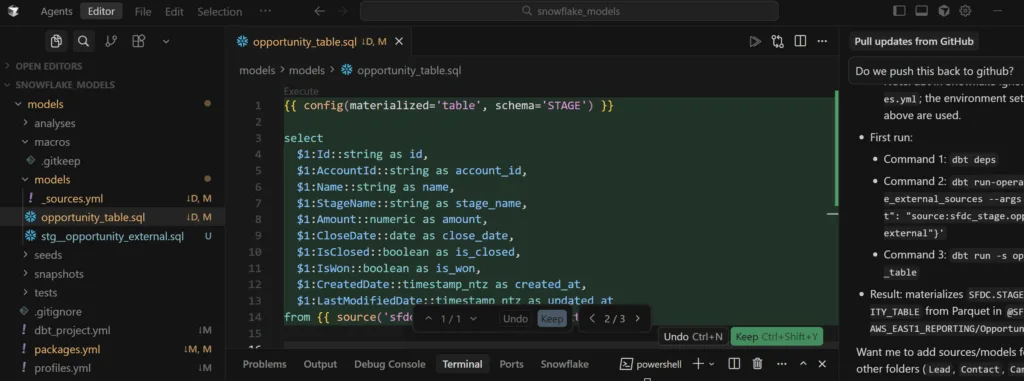

Moving from a Cloud-Based IDE to a Real IDE

We trust that Snowflake had plenty of data and user interviews to justify building a browser-based IDE for technical users instead of keeping the classic minimalist SQL worksheet editor. dbt Cloud offers a proven, battle-tested cloud IDE experience, yet frankly, we struggled to get it working for multiple clients. Today, we run dbt natively on Snowflake for our clients.

Most of the engineers and data scientists we work with already use their preferred IDE to work with Snowflake. The DataTools Pro team officially moved from VS Code to Cursor as our standard IDE. With its built-in AI copilot and agents, the switch has been wonderful.

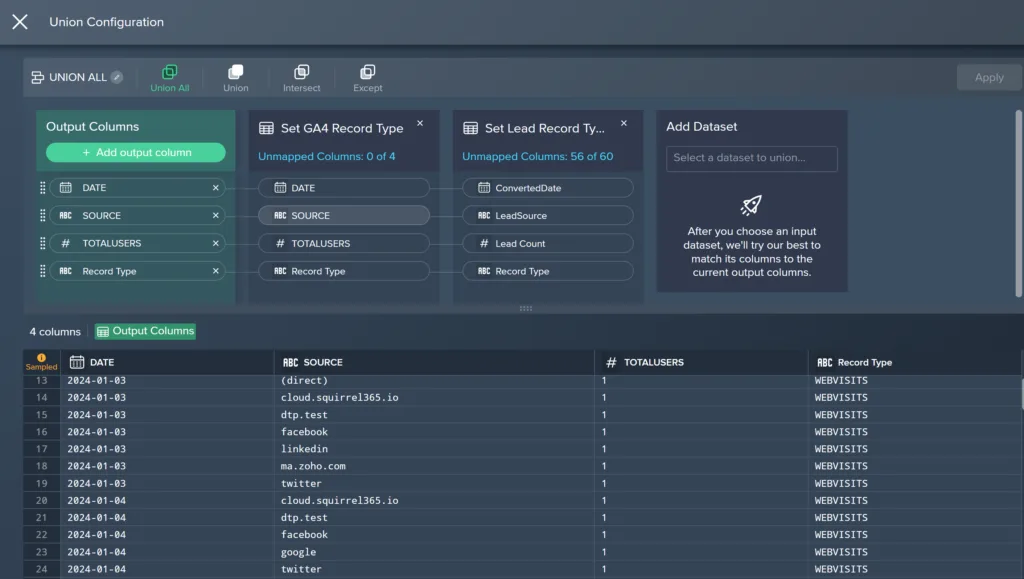

Moving Data Work out of Snowflake: Modern Data Delivery & BI Tools

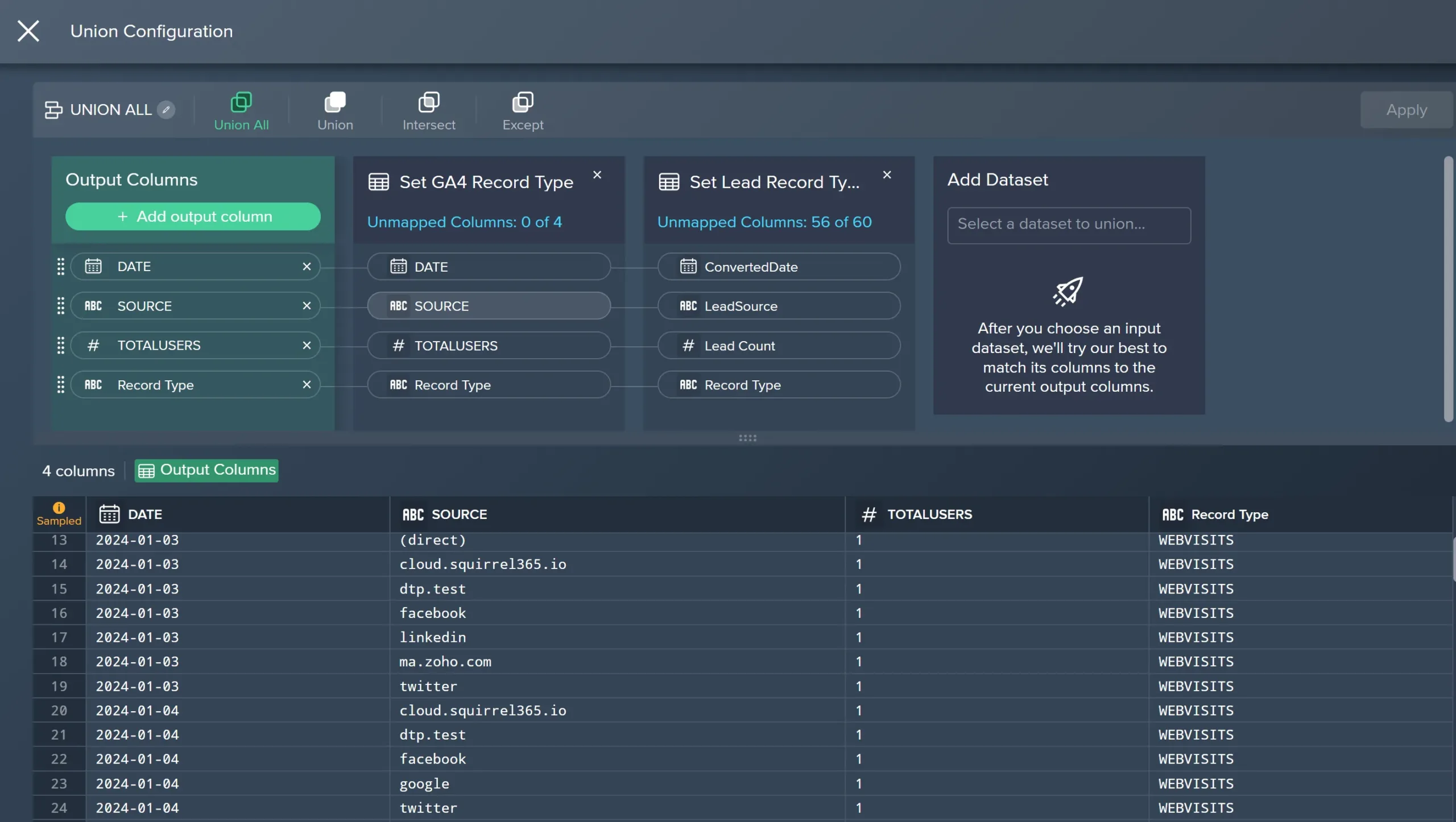

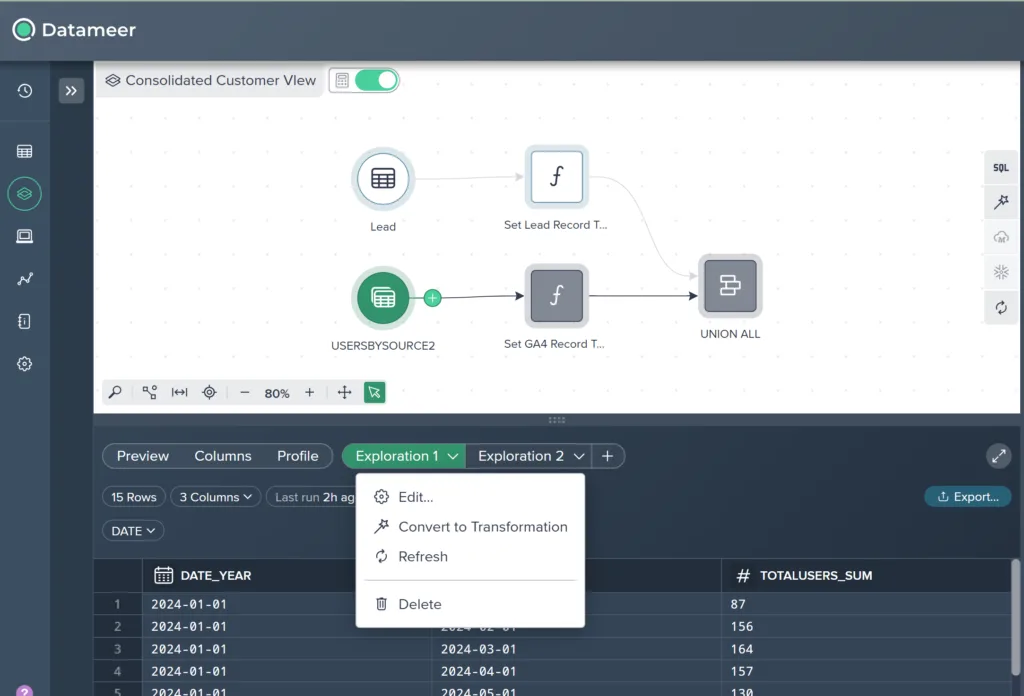

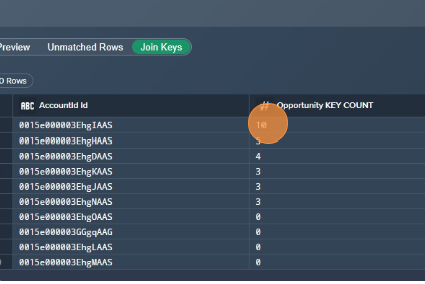

There is no shortage of BI tools purpose-built for data workers to access, slice, and dice data. The reality, though, is that Excel remains the number one, ubiquitous tool for data workers. Modern BI platforms built for the cloud lean into this heavily and provide very powerful spreadsheet capabilities. For data wrangling use cases, I am lucky enough to have Datameer at my disposal. It remains our top tool for end-to-end data delivery.

We use traditional enterprise-grade BI platforms like Tableau and Power BI. There is also a wide range of modern analytics platforms, such as Sigma, Hex, and many others, that were purpose-built for cloud data platforms like Snowflake.

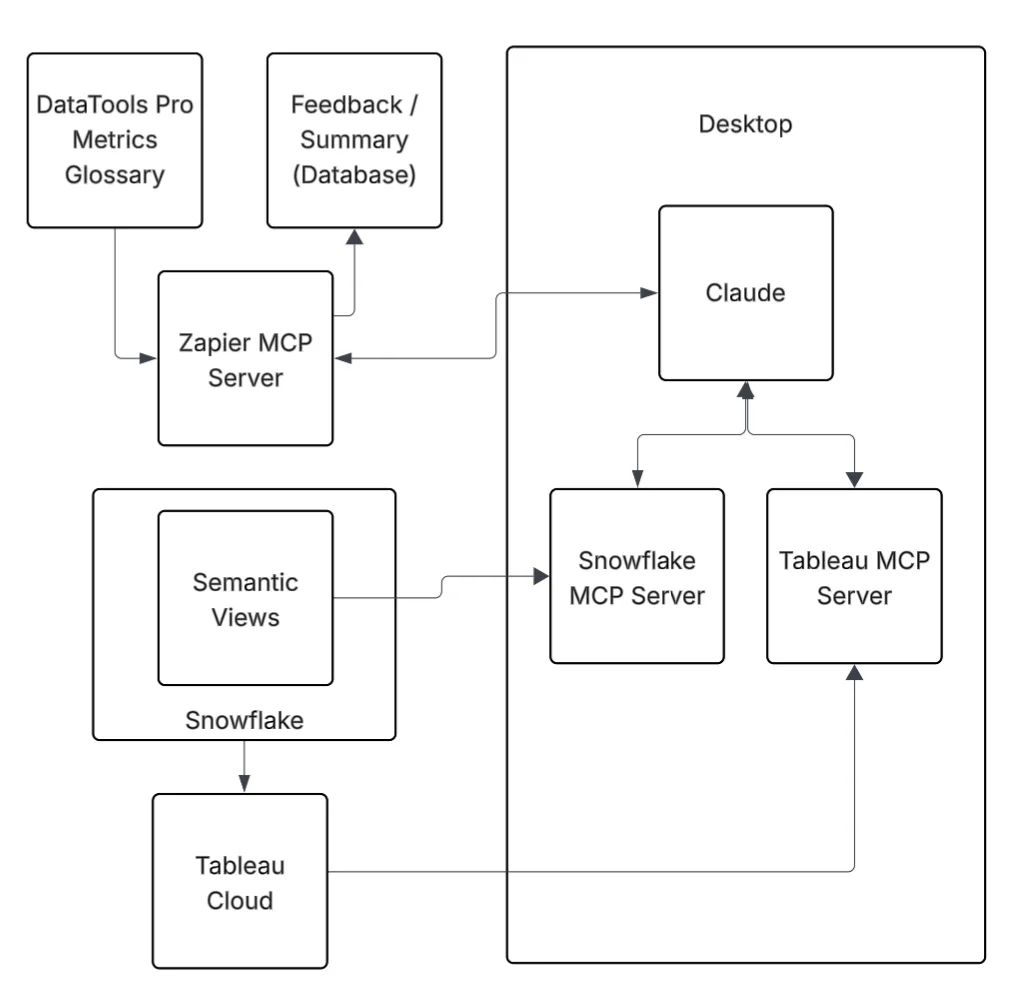

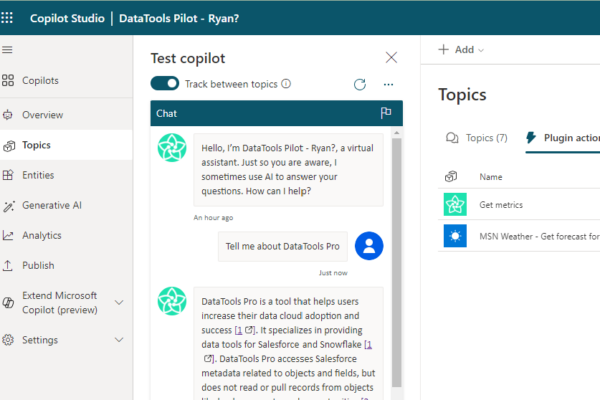

What about AI?

If you got to the end of this article wondering, “What about AI chatbots?”, I do believe there will be a time and place for them, but I am cautiously optimistic.

Snowflake listens to its users, evaluates their feedback, and has already tied up some loose ends, such as navigating between Snowflake Horizon Catalog and Workspaces. I anticipate that worksheets will continue to improve. If you need help driving higher adoption of Snowflake, we are happy to help! Check out DataTools Pro Explorer in Snowflake Marketplace and let us know what you think… It’s free!