Managing data in Salesforce can feel like trying to solve a giant puzzle. As businesses depend more on data for decision-making, they face new challenges that can seem overwhelming. From handling massive amounts of information to ensuring that it is accurate and up to date, the tasks can quickly pile up.

Embracing basic data governance principles within your Salesforce organization starts with four basic questions:

- Is data correct?

- Is data complete?

- Is data secure and compliant?

- Is data available?

When the answer to any of these questions is no, the cause often lies in how hard it is to maintain clear and precise processes for both data and meta-data. The resulting mistakes and inefficiencies slow decision-making, weaken a business's ability to respond to change, and lead to missed opportunities.

Finding solutions to these data management issues is essential for any company looking to harness the full power of their Salesforce data and meta-data. By addressing these challenges head-on, businesses can transform their data into a valuable asset.

Identifying Common Salesforce Data Management Challenges

Managing data in Salesforce can be complex with its many moving parts. Businesses frequently encounter issues that can slow down their operations and hinder effective decision-making.

The most significant challenge in Salesforce is maintaining data accuracy. As organizations grow, they accumulate vast amounts of data alongside process and technical debt. Keeping this data up to date and free of errors is difficult, and errors, duplicates, and outdated information can easily lead to misguided decisions.
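To make "accurate and up to date" concrete, here is a minimal Python sketch of a record-level audit. It assumes records arrive as dictionaries (for example, from a SOQL query result) and that `LastModifiedDate` uses the Salesforce REST API's usual timestamp format. The required-field list, the one-year staleness window, and the `audit_record` helper are hypothetical choices, not a Salesforce or DataTools Pro feature.

```python
from datetime import datetime, timedelta, timezone

# Hypothetical required fields for Contact records in this org.
REQUIRED_FIELDS = ("LastName", "Email", "AccountId")
# Assumed freshness window: records untouched for a year count as stale.
STALE_AFTER = timedelta(days=365)
# LastModifiedDate as the Salesforce REST API typically formats it,
# e.g. "2024-05-01T09:30:00.000+0000".
SF_DATETIME = "%Y-%m-%dT%H:%M:%S.%f%z"


def audit_record(record, now):
    """Return a list of data quality issues found on one record dict."""
    issues = []
    for field in REQUIRED_FIELDS:
        value = record.get(field)
        if value is None or str(value).strip() == "":
            issues.append(f"missing {field}")
    modified = datetime.strptime(record["LastModifiedDate"], SF_DATETIME)
    if now - modified > STALE_AFTER:
        issues.append("stale")
    return issues


now = datetime(2024, 6, 1, tzinfo=timezone.utc)
records = [
    {"Id": "003A", "LastName": "Lee", "Email": "lee@example.com",
     "AccountId": "001X", "LastModifiedDate": "2024-05-01T09:30:00.000+0000"},
    {"Id": "003B", "LastName": "Kim", "Email": " ", "AccountId": None,
     "LastModifiedDate": "2022-02-10T12:00:00.000+0000"},
]
for record in records:
    print(record["Id"], audit_record(record, now))
# 003A []
# 003B ['missing Email', 'missing AccountId', 'stale']
```

Checks like this are cheap to run on a schedule; the rules themselves (which fields are required, what counts as stale) remain governance decisions each team has to make.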

Another common hurdle is data silos, which form when data is extracted from Salesforce. This fragmentation can disrupt collaboration and make it hard to reach consensus on, and build universal trust in, a single view of business performance. A lack of integration between Salesforce and other systems can make the problem worse, interrupting the flow of data and creating gaps in analytics.

Security and compliance also pose significant challenges. Protecting sensitive information while ensuring compliance with industry regulations is critical. Inadequate security measures can lead to data breaches, compromising valuable customer information and damaging trust. Businesses must consistently update their security protocols to prevent unauthorized access.

Without a clear data management strategy, businesses may struggle to utilize Salesforce effectively, leading to inefficiencies in reporting and analytics.

To summarize, the main challenges businesses face in Salesforce data management include:

- Maintaining data accuracy and integrity.

- Overcoming data silos and integration issues.

- Ensuring security and compliance.

- Navigating the complexity of Salesforce features.

Recognizing these challenges is the first step toward finding solutions that ensure businesses can fully leverage the power of Salesforce.

Role of DataTools Pro in Enhancing Salesforce Data Management

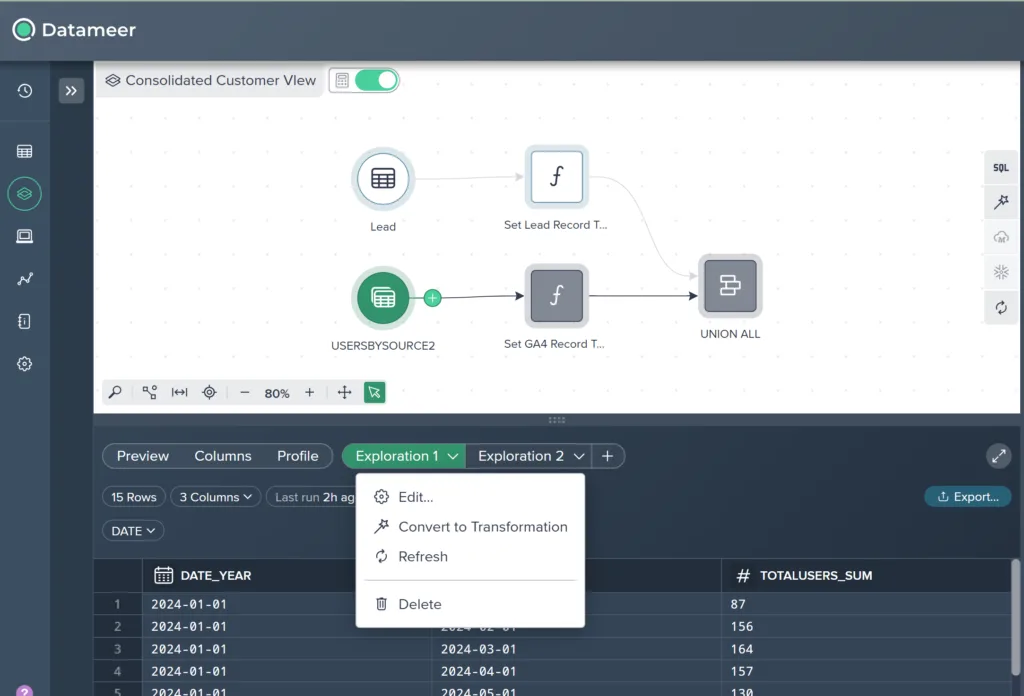

DataTools Pro is working on mapping integrations between Salesforce and other business applications, namely HubSpot and Snowflake. Keeping track of integration points outside of the core Salesforce product gives data and analytics teams important context, since they rely on the integrity of Salesforce data as it flows in and out.

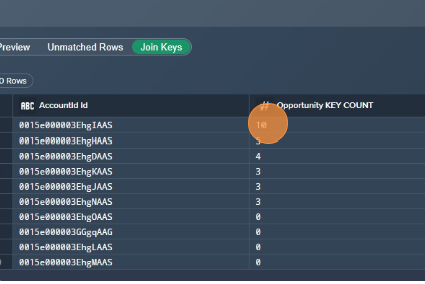

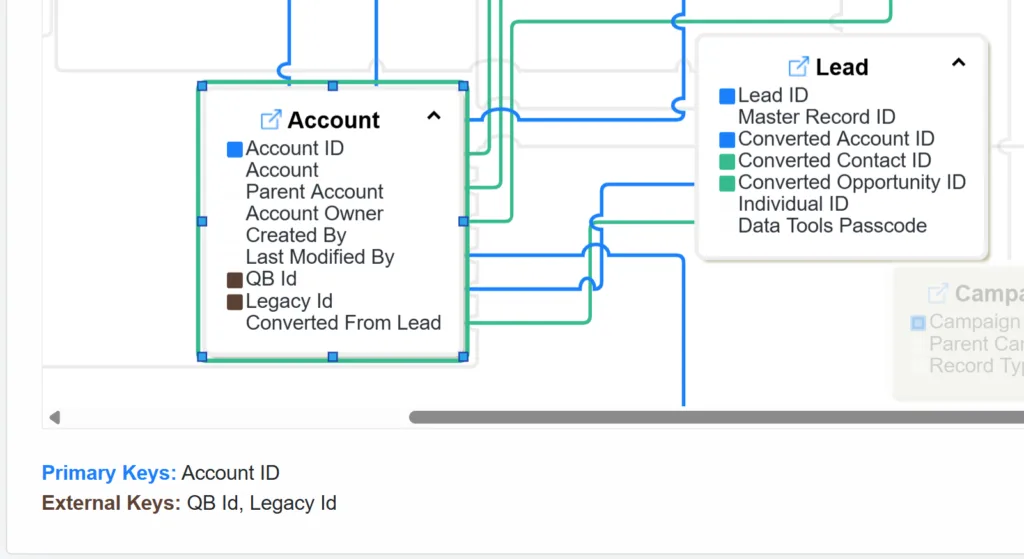
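An integration inventory does not need to be elaborate to be useful. The sketch below assumes a simple, hypothetical record per integration point (system, object, field, direction) and flags Salesforce fields that more than one external system writes to. It illustrates the idea only and is not how DataTools Pro models integrations; the `IntegrationPoint` class and the sample pairings are made up.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class IntegrationPoint:
    system: str     # external application, e.g. "HubSpot" or "Snowflake"
    sobject: str    # Salesforce object the integration touches
    field: str      # Salesforce field API name
    direction: str  # "inbound" = writes into Salesforce, "outbound" = reads from it


def external_writers(points):
    """Map each Salesforce field to the set of external systems that write to it."""
    writers = {}
    for point in points:
        if point.direction == "inbound":
            key = f"{point.sobject}.{point.field}"
            writers.setdefault(key, set()).add(point.system)
    return writers


# Hypothetical inventory; field and system pairings are illustrative only.
points = [
    IntegrationPoint("HubSpot", "Lead", "Email", "inbound"),
    IntegrationPoint("HubSpot", "Lead", "Status", "inbound"),
    IntegrationPoint("Snowflake", "Lead", "Status", "inbound"),
    IntegrationPoint("Snowflake", "Opportunity", "Amount", "outbound"),
]
writers = external_writers(points)
# Fields written by more than one system deserve a closer look.
contested = {field: systems for field, systems in writers.items() if len(systems) > 1}
print(sorted(contested))
# ['Lead.Status']
```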

Another powerful tool is the Interactive Salesforce ERD (Entity Relationship Diagram). It visualizes connections between Salesforce objects in intuitive, color-coded diagrams, making complex relationships in your data easier to understand. This clarity helps data teams identify and address discrepancies quickly, promoting more accurate data management. We recently added support for external IDs.

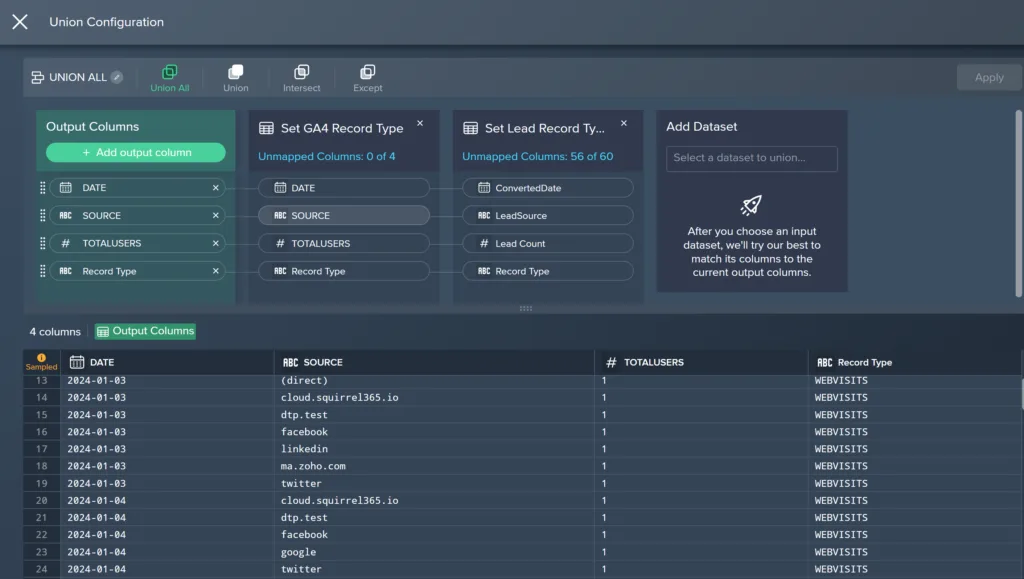

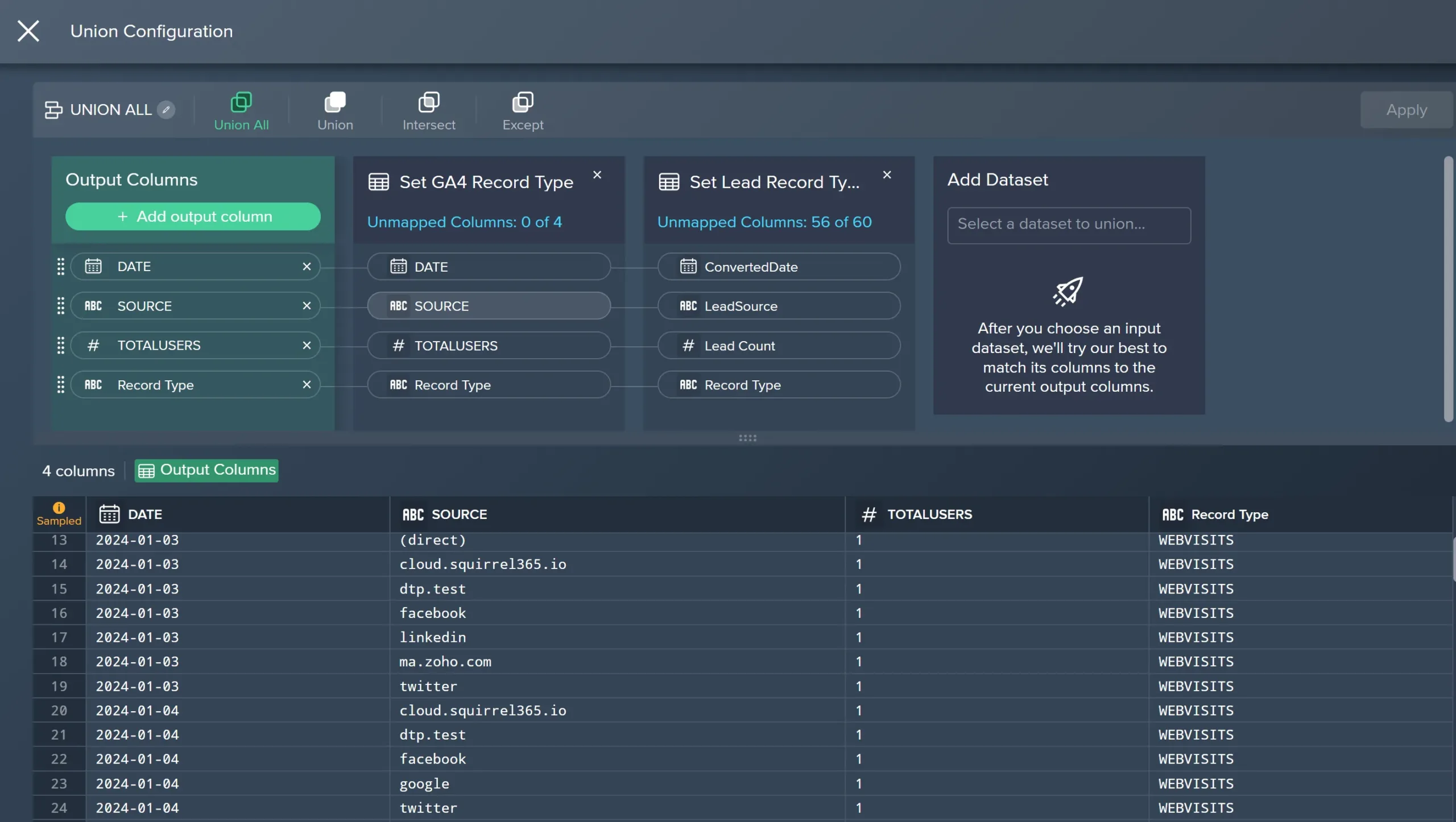
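The relationships an ERD draws can be derived from object metadata. Salesforce's sObject describe results list each field's `type`, `referenceTo`, and `externalId` properties; the sketch below assumes a trimmed, hand-written version of that structure and renders the edges as Mermaid `erDiagram` text. Mermaid is swapped in purely for illustration (it is not what DataTools Pro uses), and the sketch treats every reference as an optional lookup, which overstates optionality for master-detail fields.

```python
def relationship_edges(describe):
    """Return (child, field, parent) tuples for every reference field."""
    edges = []
    for field in describe["fields"]:
        if field["type"] == "reference":
            # Polymorphic lookups list several parents in referenceTo.
            for parent in field["referenceTo"]:
                edges.append((describe["name"], field["name"], parent))
    return edges


def external_id_fields(describe):
    """Return the API names of fields flagged as external IDs."""
    return [f["name"] for f in describe["fields"] if f.get("externalId")]


def to_mermaid(edges):
    """Render edges as Mermaid erDiagram text.

    Treats every reference as optional: zero-or-one parent, zero-or-more children.
    """
    lines = ["erDiagram"]
    for child, field, parent in edges:
        lines.append(f"    {parent} |o--o{{ {child} : {field}")
    return "\n".join(lines)


# Trimmed, hand-written stand-in for a Contact describe result.
contact = {
    "name": "Contact",
    "fields": [
        {"name": "Id", "type": "id", "referenceTo": [], "externalId": False},
        {"name": "AccountId", "type": "reference", "referenceTo": ["Account"], "externalId": False},
        {"name": "ReportsToId", "type": "reference", "referenceTo": ["Contact"], "externalId": False},
        {"name": "Legacy_Id__c", "type": "string", "referenceTo": [], "externalId": True},
    ],
}
print(to_mermaid(relationship_edges(contact)))
print(external_id_fields(contact))
```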

The Data Migration mapping tool simplifies the often-complex process of data migration. It maps data points from source systems to their targets, helping transitions go smoothly and minimizing errors. Because it replaces cumbersome spreadsheets and generates usable SQL code, businesses can manage data migrations with far less effort.
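To show what generating usable SQL from a mapping can mean, here is a hedged sketch that turns a source-to-target field mapping into a `SELECT` statement whose column aliases match Salesforce field API names. The table name, the mapping, and the `build_migration_select` helper are hypothetical, and the output is not the DataTools Pro format. Identifiers are emitted unquoted, so this assumes simple names that are valid in your SQL dialect.

```python
def build_migration_select(source_table, mapping):
    """Build a SELECT that renames source columns to Salesforce field API names.

    mapping: dict of source column (or SQL expression) -> target field API name,
    kept in insertion order. Names are emitted unquoted, so they must be simple
    identifiers that are valid in the target SQL dialect.
    """
    if not mapping:
        raise ValueError("mapping must contain at least one column")
    select_list = ",\n    ".join(f"{src} AS {target}" for src, target in mapping.items())
    return f"SELECT\n    {select_list}\nFROM {source_table};"


# Hypothetical legacy table and mapping.
mapping = {
    "cust_name": "Name",
    "LOWER(cust_email)": "Email__c",
    "legacy_id": "Legacy_Id__c",
}
print(build_migration_select("legacy_crm.customers", mapping))
```

Keeping the mapping as data rather than hand-written SQL means a reviewed mapping can regenerate the query whenever a field changes.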

In short, DataTools Pro is progressing from a simple set of tools for accessing and exporting meta-data to becoming a cog in the complex machine of Salesforce data management.

Best Practices for Streamlining Data Mapping and Integration

Smoothly migrating and integrating data within Salesforce can save time and reduce stress. Begin by thoroughly planning the data migration process. Clearly define your objectives and identify the data necessary to support these goals. Prioritize data quality by cleaning up duplicate or erroneous entries before the migration begins. This initial step ensures a smoother transition and reduces errors down the line.

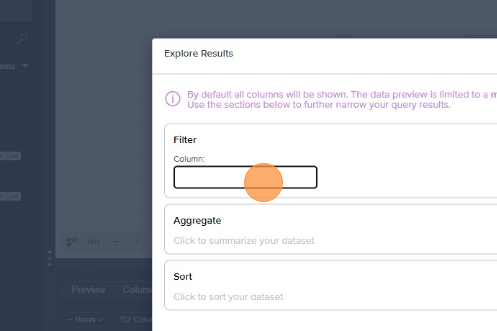
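Duplicate cleanup before a migration often starts with exact matching on a normalized key. The sketch below assumes email is a reasonable match key for the hypothetical rows shown; real matching rules (fuzzy names, phone numbers, Salesforce duplicate rules) are usually broader.

```python
def normalize_email(email):
    """Lower-case and trim an email so trivial variations match."""
    return (email or "").strip().lower()


def find_duplicates(records):
    """Group record Ids that share a normalized email; return only real groups."""
    groups = {}
    for record in records:
        key = normalize_email(record.get("Email"))
        if not key:
            continue  # records without an email cannot be matched on this key
        groups.setdefault(key, []).append(record["Id"])
    return {key: ids for key, ids in groups.items() if len(ids) > 1}


# Hypothetical source rows staged for migration.
rows = [
    {"Id": "a1", "Email": "Ana@Example.com "},
    {"Id": "a2", "Email": "ana@example.com"},
    {"Id": "b1", "Email": "bo@example.com"},
    {"Id": "c1", "Email": None},
]
print(find_duplicates(rows))
# {'ana@example.com': ['a1', 'a2']}
```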

Use specialized tools such as a Salesforce Data Dictionary to map your data objects and fields efficiently. These tools track changes automatically and make it simpler to locate and map data points, creating a more seamless integration process. Instead of relying on complex spreadsheets, opt for software that can generate usable SQL code. This automation speeds up migration and helps ensure accurate data mapping, reducing downtime and improving data reliability.
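Automatic change tracking can be pictured as comparing metadata snapshots over time. This minimal sketch assumes each snapshot is a dictionary of `"Object.Field"` to data type (which you might build from describe calls) and reports added, removed, and retyped fields. It illustrates the concept only and is not how any particular data dictionary product works.

```python
def diff_snapshots(old, new):
    """Compare two {"Object.Field": data_type} snapshots of org metadata."""
    added = sorted(new.keys() - old.keys())
    removed = sorted(old.keys() - new.keys())
    retyped = sorted(k for k in old.keys() & new.keys() if old[k] != new[k])
    return {"added": added, "removed": removed, "retyped": retyped}


# Hypothetical snapshots taken a week apart.
last_week = {"Account.Name": "string", "Account.Region__c": "picklist", "Contact.Phone": "phone"}
today = {"Account.Name": "string", "Account.Region__c": "string", "Contact.Mobile__c": "phone"}
print(diff_snapshots(last_week, today))
# {'added': ['Contact.Mobile__c'], 'removed': ['Contact.Phone'], 'retyped': ['Account.Region__c']}
```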

Establish a robust integration strategy. Utilize reliable APIs and connectors to ensure continuous data flow between Salesforce and other systems. This strategy will help eliminate data silos and maintain a consistent data landscape across your organization. Regular monitoring and updates are crucial to ensuring the integration remains efficient and effective.
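Monitoring can start with something as simple as reconciling record counts between Salesforce and a downstream copy. In practice the numbers might come from a SOQL `SELECT COUNT() FROM Contact` query on the Salesforce side and a `SELECT COUNT(*)` on the warehouse table; here they are hard-coded, hypothetical values, and the `tolerance` parameter is an assumed allowance for sync lag.

```python
def reconcile_counts(source_counts, target_counts, tolerance=0):
    """Return {object: (source, target)} where counts differ by more than tolerance."""
    mismatches = {}
    for obj in sorted(source_counts.keys() | target_counts.keys()):
        src = source_counts.get(obj, 0)
        tgt = target_counts.get(obj, 0)
        if abs(src - tgt) > tolerance:
            mismatches[obj] = (src, tgt)
    return mismatches


# Hypothetical nightly counts: Salesforce vs. a warehouse copy.
salesforce = {"Account": 1200, "Contact": 5400, "Opportunity": 830}
warehouse = {"Account": 1200, "Contact": 5391}
print(reconcile_counts(salesforce, warehouse))
# {'Contact': (5400, 5391), 'Opportunity': (830, 0)}
```

A missing object on one side shows up as a count of zero, which is usually the most urgent kind of drift to catch.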

By following these best practices, businesses can alleviate common data migration and integration problems, leading to more integrated data environments and improved business operations.

Conclusion

Salesforce data management presents several challenges, but they can be effectively addressed with the right tools and strategies.

Prioritizing proper data management isn’t just about ticking a checkbox. It forms the backbone of informed decision-making and drives business success. By addressing data management proactively, businesses reduce errors, enhance security, and improve collaboration across departments. These improvements lead to more reliable analytics and stronger strategic outcomes.

Consider using advanced data management tools tailored for Salesforce and follow best practices to keep your data environment efficient and effective. Whether it’s through optimized reporting or seamless integrations, these efforts empower enterprises to transform data challenges into opportunities for growth.

With features that simplify data challenges and enhance usability, you can gain clearer insights and improve your decision-making processes. Take the next step with DataTools Pro and unlock the potential of your data today.