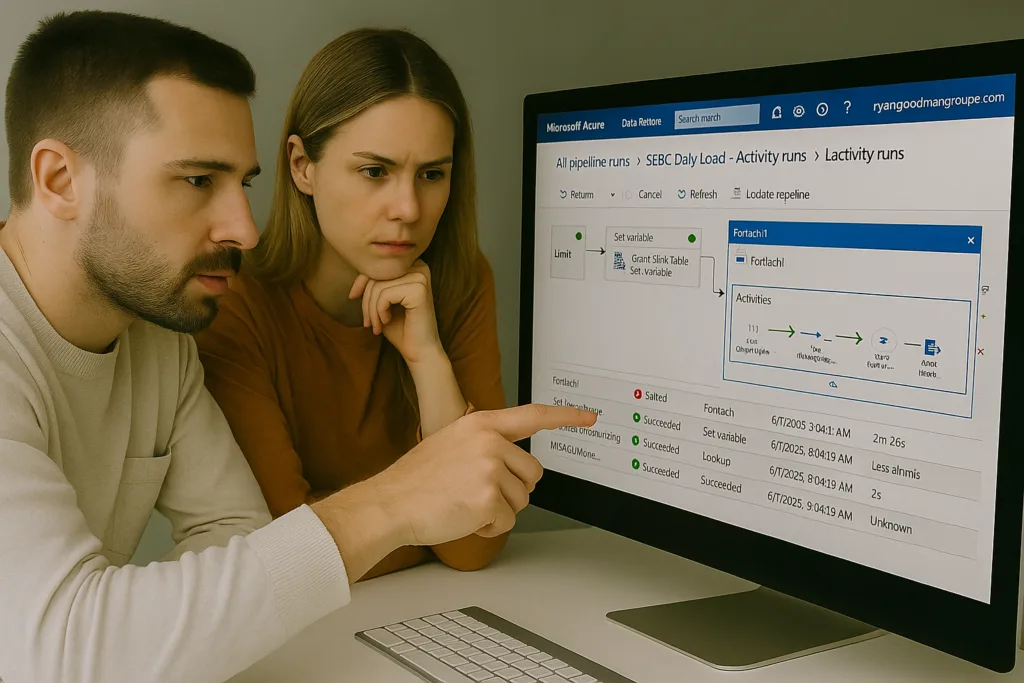

The ADF Salesforce Connector is one of many connectors that let you copy data from Salesforce to your data warehouse of choice. Azure Data Factory (ADF) and Salesforce are well-integrated solutions that have gone through multiple iterations over the years. The connector provides seamless data transfer, helping businesses keep their records up to date and consistent across data platforms. However, like any technology, the ADF Salesforce Connector can sometimes run into connection problems. Most of these problems are caused by material changes to your source or sink system. They can disrupt workflows and delay data processing, which is why understanding how to address them is so important.

Pipeline problems caused by poor change management can lead to inaccurate reporting or outdated information, both of which are headaches for any business relying on timely data. By getting a handle on standard maintenance and monitoring, you can eliminate most of these challenges. Keeping data operations running smoothly strengthens your data management efforts, so you can focus your energy on using data for decision support, operations, analytics, and AI.

Identifying Common Connection Issues

Let’s dive into some typical connection issues users face with the ADF Salesforce Connector and how to recognize them:

1. Material Schema Changes: A schema change in which fields are dropped or renamed is a tricky situation, because some configuration approaches in ADF fail when it happens. In principle, your ADF connections should have some resilience to schema changes. How you design your workflows determines how much schema drift can impede your ADF reliability.

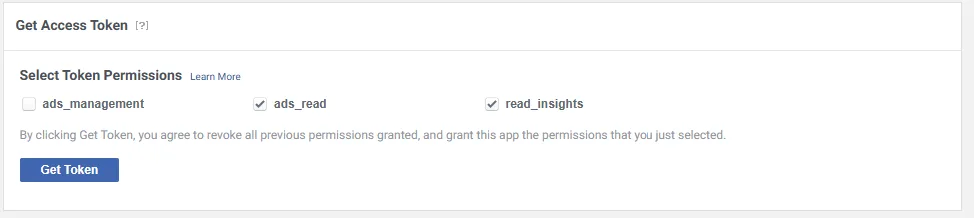

2. Authentication Failures and Permission Changes: The latest ADF integration requires your Salesforce admin to create a connected app. It's important that the run-as user is a dedicated system user and not an individual contributor's account, so that the connection does not break when that person's access changes. We recommend a system user with permissions only on the objects that are approved for data extraction.

3. API Limit Exceedance: Salesforce enforces API call limits, and exceeding them can result in temporary connection blocks. ADF is rarely, if ever, the root cause of API limit issues. ADF uses the Salesforce Bulk API, which handles about 2,000 records per credit, so even with millions of records per day the credit usage is low. We have, however, seen other third-party processes that make poor use of API credits cause ADF to fail at night.

4. Data Format Discrepancies: Mismatched data formats between Salesforce and ADF can lead to errors or incomplete data transfers.
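The schema change item above can be checked for before a pipeline runs. The following is a minimal sketch, not part of ADF or the connector: it assumes you already have the list of fields your pipeline maps and the list of fields the Salesforce object currently exposes, however you obtain them. The names `SchemaDrift` and `detect_drift` are hypothetical, and comparing names case-insensitively is an assumption of this sketch.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class SchemaDrift:
    """Result of comparing expected fields with the current source fields."""
    missing: frozenset  # fields the pipeline expects but the source no longer has
    added: frozenset    # fields in the source that the pipeline does not map yet

    @property
    def is_breaking(self) -> bool:
        # Dropped or renamed fields break explicit mappings; new fields usually do not.
        return bool(self.missing)


def detect_drift(expected_fields, current_fields) -> SchemaDrift:
    """Compare two collections of field names, ignoring case (an assumption)."""
    expected = {name.lower() for name in expected_fields}
    current = {name.lower() for name in current_fields}
    return SchemaDrift(
        missing=frozenset(expected - current),
        added=frozenset(current - expected),
    )


if __name__ == "__main__":
    # Made-up field lists for illustration: Region__c was renamed to Territory__c.
    drift = detect_drift(
        expected_fields=["Id", "Name", "Region__c"],
        current_fields=["Id", "Name", "Territory__c"],
    )
    print("breaking:", drift.is_breaking)
    print("missing:", sorted(drift.missing))
    print("added:", sorted(drift.added))
```

A rename shows up as one missing field plus one added field, which is a useful hint when deciding whether a mapping needs to be updated or a field was simply removed.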

To better tackle these challenges, it helps to think of them as puzzles where identifying the root cause is key. For instance, if you regularly encounter authentication failures, double-checking the connected app settings and validating credentials is a good starting point. If you suspect a network problem instead, confirm that the connection is stable and secure. Knowing the common types of issues also helps you prepare for them, so they don't take you by surprise.

Understanding and recognizing these issues early on can save you a lot of time and trouble. By spotting potential problems quickly, you can take corrective action before they escalate, maintaining a smooth and efficient data integration process.
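One way to spot API limit trouble early is to do the arithmetic before a nightly load. The sketch below takes the figure of 2,000 records per credit quoted earlier in this article at face value and treats it as an assumption. The function names, the `reserve_fraction` parameter, and the example numbers are all hypothetical. The remaining allowance is something you would read from your own org.

```python
import math

# Figure quoted in this article; treat it as an assumption, not a guarantee.
RECORDS_PER_CREDIT = 2000


def estimated_credits(record_count: int, records_per_credit: int = RECORDS_PER_CREDIT) -> int:
    """Rough number of API credits needed to move record_count records."""
    return math.ceil(record_count / records_per_credit)


def has_headroom(record_count: int, remaining_credits: int, reserve_fraction: float = 0.2) -> bool:
    """True if the load fits while leaving a reserve for other integrations.

    reserve_fraction is a hypothetical safety margin: the share of the
    remaining allowance this load is not allowed to consume.
    """
    usable = remaining_credits * (1 - reserve_fraction)
    return estimated_credits(record_count) <= usable


if __name__ == "__main__":
    nightly_records = 5_000_000  # made-up volume for illustration
    print("credits needed:", estimated_credits(nightly_records))
    print("fits with 100,000 remaining:", has_headroom(nightly_records, 100_000))
    print("fits with 3,000 remaining:", has_headroom(nightly_records, 3_000))
```

If the check fails, the likelier culprit is another process consuming the allowance during the day, which matches the pattern described in the API limit item above.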

Best Practices for Maintaining a Stable Connector

Keeping the ADF Salesforce Connector running smoothly involves some regular attention and checks. Here are tips to maintain its stability:

– Regular Updates: Always keep your connectors up to date with the latest patches and enhancements. Updates often fix bugs and improve performance.

– Schedule Regular Maintenance: Set aside time for regular system checks. This helps you identify potential issues early and avoid unexpected downtime.

– Engage in Log Reviews: Reviewing logs can offer insights into potential warning signs or anomalies in the data flow. Addressing these early can prevent bigger issues later.

– Feedback Loop: Establish a feedback system where team members can report issues as they notice them. A proactive approach can often catch issues before they become major disruptions.

By integrating these practices into your routine, you will enhance not only the stability but also the efficiency of your data integration efforts. Monitoring and tweaking as needed will ensure a seamless and effective connection experience, reducing the likelihood of facing disruptive issues down the line.
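The log review tip above can be partly automated. This is a minimal sketch that assumes you have exported pipeline run records as a list of dictionaries with `status` and `message` keys. That record shape, the function names, and the keyword table are hypothetical. The keywords are common Salesforce error codes, but the exact text that appears in your logs may differ, so adjust the table to what you actually see.

```python
from collections import Counter

# Hypothetical keyword table mapping an issue category to substrings
# that often appear in related error messages.
ERROR_HINTS = {
    "schema": ("INVALID_FIELD",),
    "auth": ("INVALID_LOGIN", "INVALID_SESSION_ID", "invalid_grant"),
    "api_limit": ("REQUEST_LIMIT_EXCEEDED",),
}


def classify(message: str) -> str:
    """Return the first category whose keyword appears in the message."""
    lowered = message.lower()
    for category, needles in ERROR_HINTS.items():
        if any(needle.lower() in lowered for needle in needles):
            return category
    return "other"


def summarize_failures(runs) -> Counter:
    """Count failed runs per category; runs with any other status are ignored."""
    return Counter(
        classify(run.get("message", ""))
        for run in runs
        if run.get("status") == "Failed"
    )


if __name__ == "__main__":
    # Made-up run records for illustration only.
    sample_runs = [
        {"status": "Succeeded", "message": ""},
        {"status": "Failed", "message": "INVALID_FIELD: No such column 'Region__c'"},
        {"status": "Failed", "message": "REQUEST_LIMIT_EXCEEDED: TotalRequests Limit exceeded"},
        {"status": "Failed", "message": "Timeout while reading response"},
    ]
    for category, count in summarize_failures(sample_runs).most_common():
        print(category, count)
```

Grouping failures by category makes a recurring pattern, such as repeated limit errors at night, easier to see than reading individual run messages.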

Salesforce Azure DataFactory Tutorials

Connect Azure Data Factory to Salesforce.com (New 2025)

Salesforce data pipelines to Snowflake free template

Ensuring your data integration process runs smoothly is key to maintaining an effective workflow. If you need some hands-on help getting set up, we offer DataTools Doctors, where we can hop in and get you sorted connecting and integrating Azure Data Factory and Fabric with Salesforce and Snowflake.