Last month, I had an opportunity to roll up my sleeves and start building analytics with Snowflake MCP and Snowflake Semantic Views. I wanted to see how far I could push real-world analyst and quality assurance scenarios with Tableau MCP and DataTools Pro MCP integration. The results gave me a glimpse of the future of AI/BI with real, production data. My objective was to deliver a correct, viable analysis that otherwise would have been delivered via Tableau.

The time I spent modeling my data, writing crystal-clear semantics, and working with unambiguous data paid off. The lab delivered great results, but I ended it with serious concerns about governance, trust, and quality assurance layers. This article highlights my findings and links to step-by-step tutorials.

Connecting Claude, Snowflake MCP, and Semantic Views

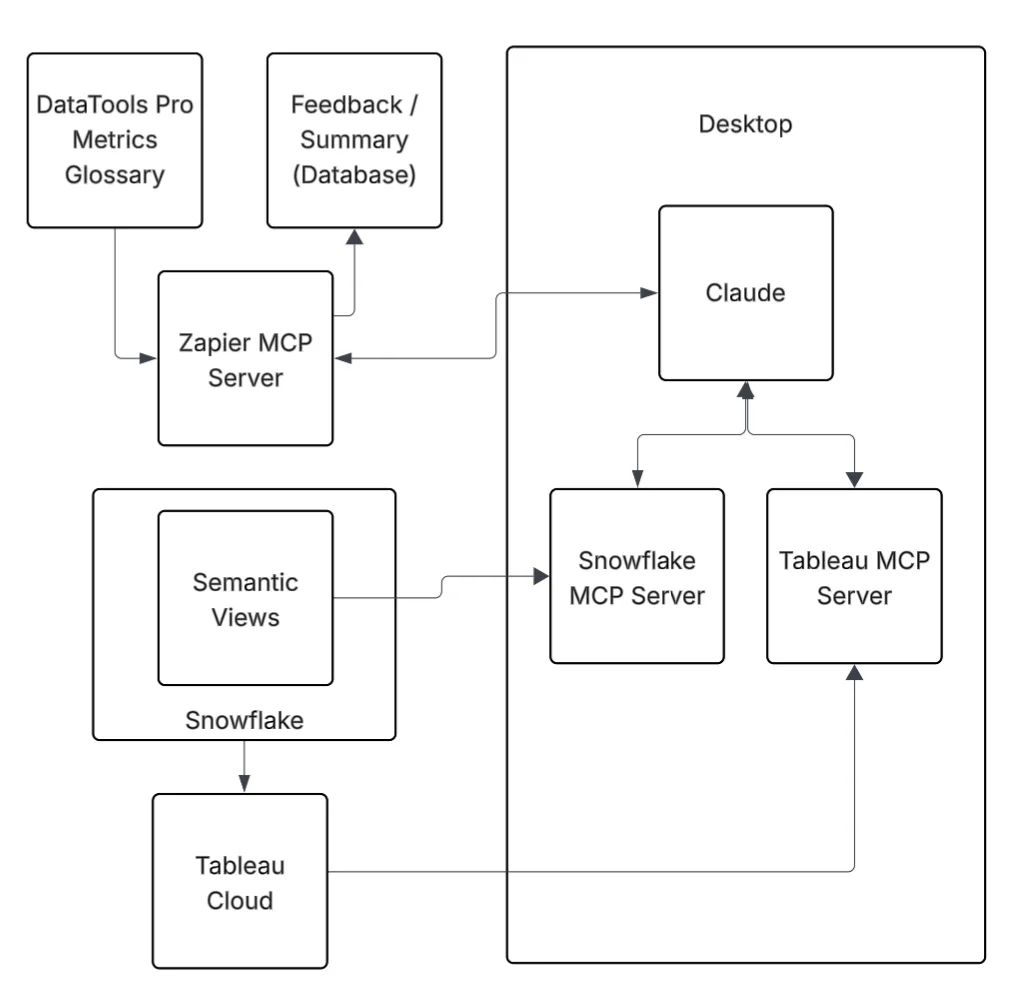

The first step in connecting all of the components was building my Snowflake Semantic Views. Snowflake MCP gave me the framework to orchestrate queries and interactions, and Snowflake Semantic Views gave me the lens to apply meaning. All of my work and experimentation occurred in Claude, which gave me the AI horsepower to analyze and summarize insights. To connect Snowflake to Claude, I used the official Snowflake MCP Server, which is installed on my desktop and configured in Claude.

Together, these tools created a working environment where I could ask questions, validate results, and build confidence in the answers I got back.

- Snowflake MCP → Control and orchestration – Learn how to set up step by step

- Semantic Views → Governed metadata and meaning – Learn more about Snowflake Semantic Views

- Claude → An intelligent analyst partner
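For readers who have not registered an MCP server in Claude Desktop before, here is a minimal sketch of that step. Claude Desktop reads its server list from the `mcpServers` key of `claude_desktop_config.json`. Everything else is an assumption for illustration: the server label, the launcher command, its arguments, and the environment variable name are placeholders, so take the real values from the Snowflake MCP Server's own setup instructions.

```python
import json


def add_mcp_server(config, name, command, args, env=None):
    """Return a copy of a Claude Desktop config with one MCP server entry added."""
    updated = dict(config)
    servers = dict(updated.get("mcpServers", {}))
    entry = {"command": command, "args": list(args)}
    if env:
        entry["env"] = dict(env)
    servers[name] = entry
    updated["mcpServers"] = servers
    return updated


# All values below are placeholders, not the server's documented settings.
config = add_mcp_server(
    {},
    name="snowflake",                    # hypothetical label shown in Claude
    command="PATH_TO_SERVER_LAUNCHER",   # placeholder: how the server is started
    args=["PLACEHOLDER_SERVER_ARGS"],    # placeholder: see the server's README
    env={"SNOWFLAKE_PAT": "load-from-a-secret-store"},  # hypothetical variable name
)

# Review the JSON before merging it into claude_desktop_config.json.
print(json.dumps(config, indent=2))
```

Building the entry as a copy, rather than editing the file in place, makes it easy to review the result before touching a config that may already list other servers.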

Creating Snowflake Semantic Views

With my Snowflake Semantic View set up, I spent some time researching and reading other folks’ experiences with semantic views. I highly recommend having a validated and tested Semantic View before embarking on AI labs. If you don’t know what metadata to enter into your Semantic View, seek additional advice from subject matter experts. AI can fill in blanks, but it shouldn’t be trusted to invent meaning without human oversight: Why AI-Generated Meta-Data in Snowflake Semantic Views Can Be Dangerous

Bottom line… Begin with a simple and concise Snowflake semantic model. Build clearly defined dimensions and measures. Use real-world aliases and refrain from using AI to fill in the blanks, unless that is your objective. Layer on complexity once you’re comfortable with the results.
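One way to hold yourself to that bottom line is a small pre-flight check on the model definition before it goes anywhere near Snowflake. The sketch below is not Snowflake syntax and not part of my lab. It is a hypothetical Python description of a model, with made-up field names, that flags fields missing a description, missing a real-world alias, or carrying AI-written metadata that no subject matter expert has reviewed.

```python
from dataclasses import dataclass, field


@dataclass
class SemanticField:
    name: str
    kind: str                       # "dimension" or "measure"
    description: str = ""
    aliases: list = field(default_factory=list)
    authored_by: str = "human"      # "human" or "ai"


def review_fields(fields):
    """Return (field name, issue) pairs that need attention before publishing."""
    issues = []
    for f in fields:
        if f.kind not in ("dimension", "measure"):
            issues.append((f.name, "unknown kind"))
        if not f.description.strip():
            issues.append((f.name, "missing description"))
        if not f.aliases:
            issues.append((f.name, "no real-world alias"))
        if f.authored_by == "ai":
            issues.append((f.name, "AI-written metadata needs SME review"))
    return issues


# Hypothetical fields, for illustration only.
model = [
    SemanticField("STAGE_NAME", "dimension",
                  "Current sales stage of the opportunity", ["stage", "pipeline stage"]),
    SemanticField("AMOUNT", "measure", "", ["deal size"]),
    SemanticField("REGION", "dimension",
                  "Sales territory", ["territory"], authored_by="ai"),
]

for name, issue in review_fields(model):
    print(f"{name}: {issue}")
```

A check like this does not make the metadata correct. It only makes the gaps visible so a human can close them.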

What Worked Well

- Control over data access

Thankfully, the Snowflake MCP is limited to semantic views and Cortex Search. The opportunity and value of Cortex Search cannot be overstated. I will cover that in another post. The idea of unleashing an AI agent with elevated permissions to write SQL on your entire data warehouse is a governance nightmare. Semantic Views gave me the ability to scope exactly what Claude could see and query.

- Accuracy of results

The top question I get during AI labs is “Is this information correct?” I kept a validated Tableau dashboard on my other monitor to check the correctness of every answer.

- Simple to complex questioning

My recommendation with any LLM-powered tool is to start with high-level aggregate questions. Use these to build a shared understanding and confidence. Then, grounded on validated facts, you can drill down into more detailed questions with confidence. This approach kept me in control when the analysis moved beyond existing knowledge and available analysis.
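The side-by-side check against a trusted dashboard can also be written down so it is repeatable. This is a minimal sketch, assuming you copy the top-level aggregates from the validated dashboard and from the AI answers into two dictionaries by hand. The metric names, numbers, and tolerance are invented for illustration and are not results from my lab.

```python
import math


def compare_to_baseline(baseline, answers, rel_tol=0.005):
    """Label each baseline metric as 'match', 'mismatch', or 'missing'."""
    report = {}
    for metric, expected in baseline.items():
        if metric not in answers:
            report[metric] = "missing"
        elif math.isclose(answers[metric], expected, rel_tol=rel_tol):
            report[metric] = "match"
        else:
            report[metric] = "mismatch"
    return report


# Made-up values: the dashboard is treated as the source of truth.
dashboard = {"total_opportunities": 1200, "won_amount": 500000.0, "win_rate": 0.31}
ai_answers = {"total_opportunities": 1200, "won_amount": 480000.0}

report = compare_to_baseline(dashboard, ai_answers)
for metric, status in report.items():
    print(f"{metric}: {status}")

# Only drill down once every high-level aggregate matches.
ready_to_drill_down = all(status == "match" for status in report.values())
print("ready to drill down:", ready_to_drill_down)
```

Gating the detailed questions on matching aggregates is the same simple-to-complex habit described above, just made explicit.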

Where I Got Stuck

Three challenges slowed me down:

- Metadata gaps – When the semantic layer lacked clarity, Claude produced ambiguous answers. This isn’t a garbage in, garbage out problem. It is me having a level of subject matter expertise that was not captured in my semantic layer or in a feedback loop to make the AI system smarter. LLM analysts feel less magical when you know the answers. That is where adding Tableau MCP allowed a pseudo peer review to occur.

- Over-scoping – When I got greedy and exposed too many columns, ambiguity crept in. AI responses became less focused and harder to trust. Narrower scope = better accuracy.

- Context limits – I had Claude do a deep analysis dive. I also had it code a custom funnel dashboard that perfectly rendered a visual funnel with correct data. At some point, Claude explained that my context limit had been reached. My analysis hit a brick wall, and I had to start over. Claude is a general-purpose AI chatbot, but it was still disappointing to hit my stride and then have to stop working.

Risks You Should Know

If you’re using AI to build your semantic layer, you need to be aware of the risks:

- AI-generated semantics can distort meaning. It’s tempting to let an LLM fill in definitions, but without context, you’re embedding bad assumptions directly into your semantic layer: Why AI-Generated Meta-Data in Snowflake Semantic Views Can Be Dangerous

- Do not give LLMs PII or Sensitive PII. As a rule of thumb, I do not add PII or sensitive PII into semantic models. I hope that at some point we can employ Snowflake aggregation rules or masking rules.

- Governance blind spots. Connecting the Snowflake MCP requires access from your desktop. For governance, we use a personal access token for that specific Snowflake user’s account. That ensures all requests are auditable. Beyond a single user on a desktop, it’s unclear how to safely scale the MCP.

- False confidence. Good syntax doesn’t equal good semantics. Always validate the answers against known results before you scale usage.
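The PII rule of thumb above is easy to enforce with a quick screen of the columns you plan to expose. The sketch below is a simple name-based check in Python. The keyword list and column names are assumptions for illustration, and a name match is only a prompt for human review, not proof that a column is or is not sensitive.

```python
import re

# Hypothetical keyword list; extend it to match your own naming conventions.
PII_PATTERN = re.compile(
    r"(^|_)(ssn|email|phone|dob|birth|address|first_name|last_name|full_name)(_|$)",
    re.IGNORECASE,
)


def flag_pii_columns(columns):
    """Return the column names that look like they hold personal data."""
    return [c for c in columns if PII_PATTERN.search(c)]


# Made-up candidate columns for a semantic model.
candidates = ["ACCOUNT_ID", "CONTACT_EMAIL", "STAGE_NAME", "PHONE_NUMBER", "CLOSE_DATE"]

flagged = flag_pii_columns(candidates)
print("review before exposing:", flagged)
```

Columns with opaque names will slip past a check like this, so it complements rather than replaces a review of the underlying data.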

Final Take

Snowflake MCP and Semantic Views are still very much experimental features. They provide a glimpse of what will be possible when the barriers to accessing governed, semantically correct data are removed.

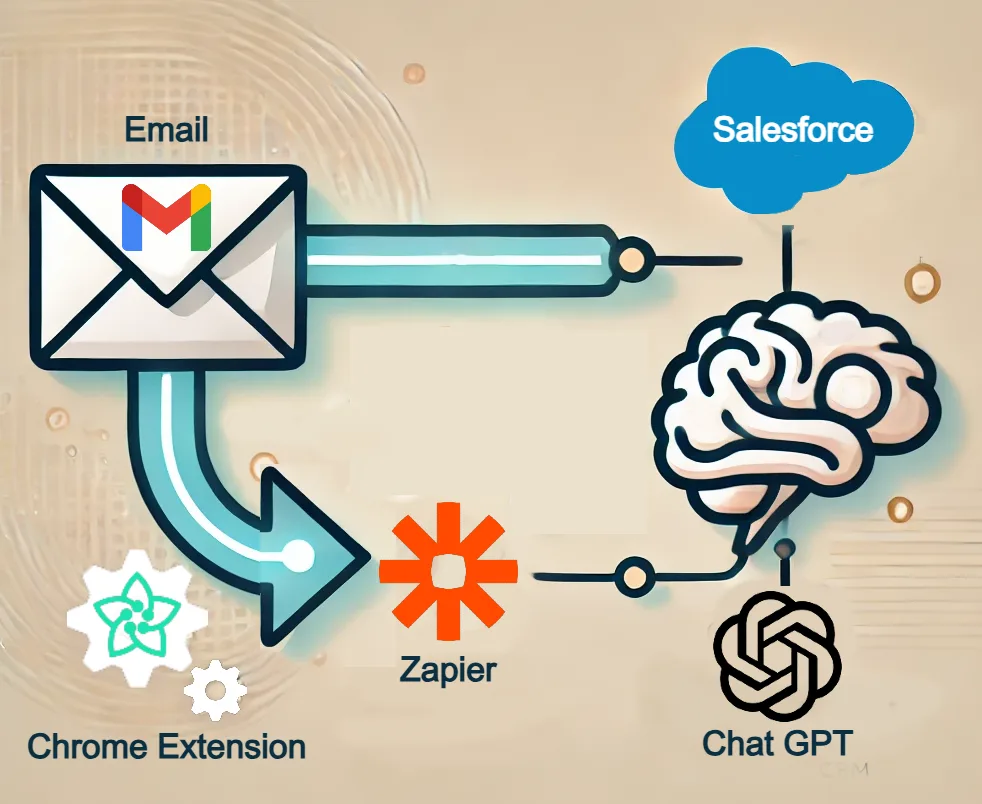

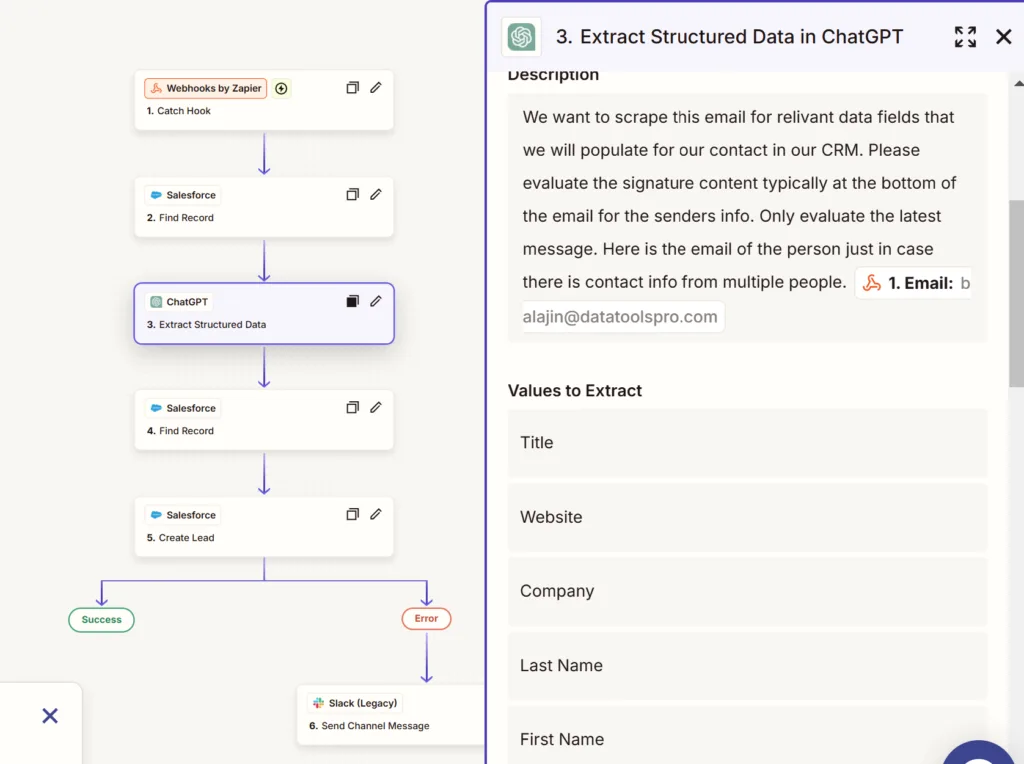

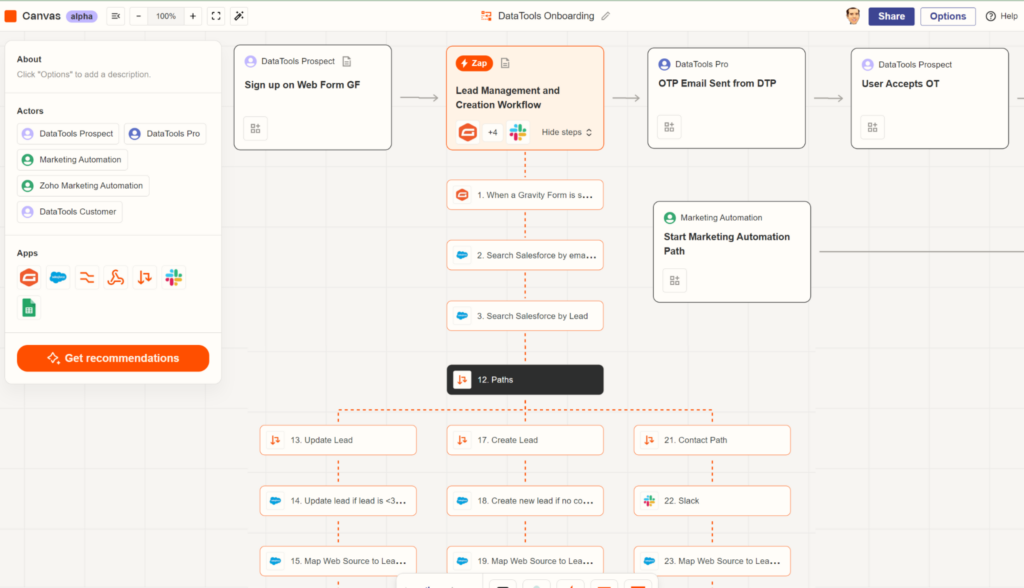

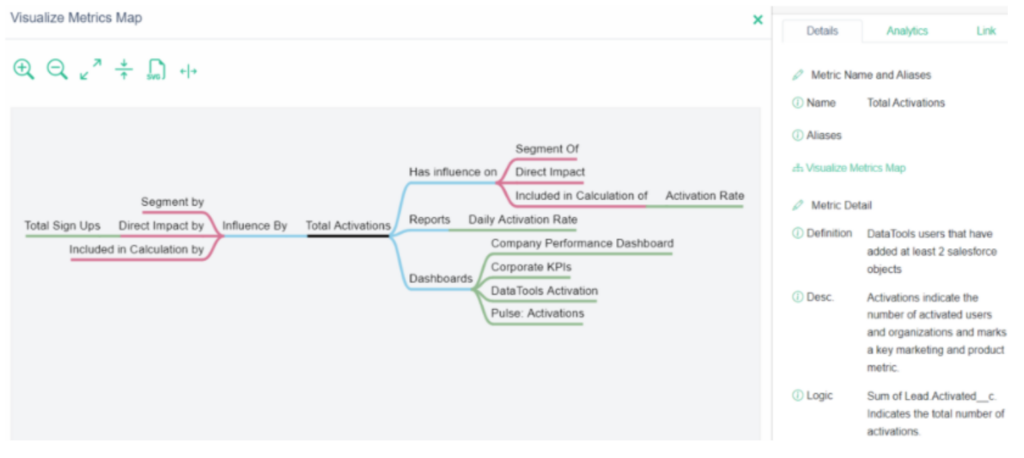

In my case, I employed DataTools Pro for deeper metric glossary semantics and a writeback step via Zapier to capture learnings, re-directions, and insights for auditing purposes. If you would like assistance setting up a lab for testing, feel free to contact us to set up a complimentary session.