Data mapping is like drawing a detailed road map that connects your information systems. In Salesforce especially, mapping helps data from different sources talk to each other. With the right map, information flows smoothly, making it easier for teams to work together and achieve their goals.

Understanding Salesforce Data Mapping

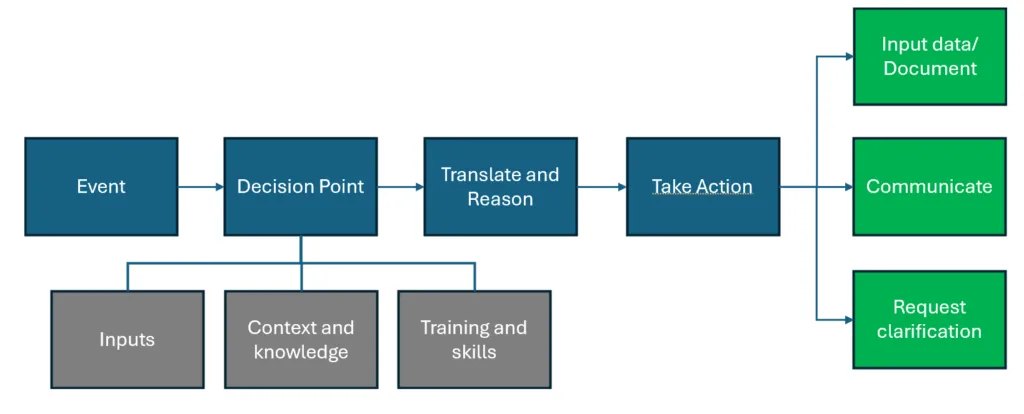

Data mapping in Salesforce involves connecting data from different sources to ensure compatibility and consistency. This process outlines how data fields from one system match with another. It’s essential for integrating various datasets so they work together smoothly. Without proper mapping, data can become mismatched, leading to errors.
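
To make the idea of matching fields between systems concrete, here is a minimal sketch in plain Python. It assumes a hypothetical source system whose column names (`first_name`, `email_address`, and so on) are invented for illustration; the targets are standard Salesforce Contact field names. The helper `map_record` is also hypothetical and is not part of Salesforce or DataTools Pro.

```python
# Hypothetical source column names mapped to standard Salesforce Contact fields.
FIELD_MAP = {
    "first_name": "FirstName",
    "last_name": "LastName",
    "email_address": "Email",
    "phone_number": "Phone",
}


def map_record(source_record, field_map):
    """Rename source fields to target fields.

    Returns a tuple (mapped, unmapped): the renamed record and the list of
    source fields that have no entry in the mapping.
    """
    mapped = {}
    unmapped = []
    for source_field, value in source_record.items():
        target_field = field_map.get(source_field)
        if target_field is None:
            unmapped.append(source_field)
        else:
            mapped[target_field] = value
    return mapped, unmapped


# Sample row from the hypothetical source system; "fax" has no mapping.
crm_row = {
    "first_name": "Ada",
    "last_name": "Lovelace",
    "email_address": "ada@example.com",
    "fax": "n/a",
}
mapped, unmapped = map_record(crm_row, FIELD_MAP)
print(mapped)
print(unmapped)
```

Returning the unmapped fields, rather than silently dropping them, is one way to surface the mismatches described above before they turn into errors.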

Data mapping is crucial for managing information efficiently. It acts as a blueprint that guides how information flows between systems. In Salesforce, accurate data mapping means that related records, such as contacts and transactions, align correctly. This alignment is necessary for generating accurate reports and analytics, and it supports seamless data integration across systems.

However, data mapping comes with its challenges. One common challenge is dealing with disparate data sources. These sources might have different formats, naming conventions, or structures, making synchronization tricky. Another issue is ensuring that changes in one system reflect accurately in another. This requires constant monitoring and updates.
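
One way to reduce friction from differing naming conventions is to normalize field names before trying to match them. The sketch below is a small illustration in plain Python; the function name `normalize_field_name` and the sample column names are hypothetical. It only handles case, spaces, hyphens, and dots, so other conventions (camelCase, for example) would need extra rules.

```python
import re


def normalize_field_name(name):
    """Lowercase a field name and collapse spaces, hyphens, and dots into underscores."""
    name = name.strip().lower()
    return re.sub(r"[\s\-.]+", "_", name)


# The same logical fields as they might appear in different source systems.
raw_names = ["First Name", "first-name", " FIRST_NAME ", "Email.Address"]
normalized = [normalize_field_name(n) for n in raw_names]
print(normalized)
```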

Data volume is a further challenge. As companies grow, their datasets expand, and mapping becomes more complex. Handling these vast datasets without proper tools can lead to mistakes. Coordinating between different teams to standardize mapping conventions can also be difficult, especially when team members use different tools or methods.

Leveraging DataTools Pro for Efficient Data Mapping

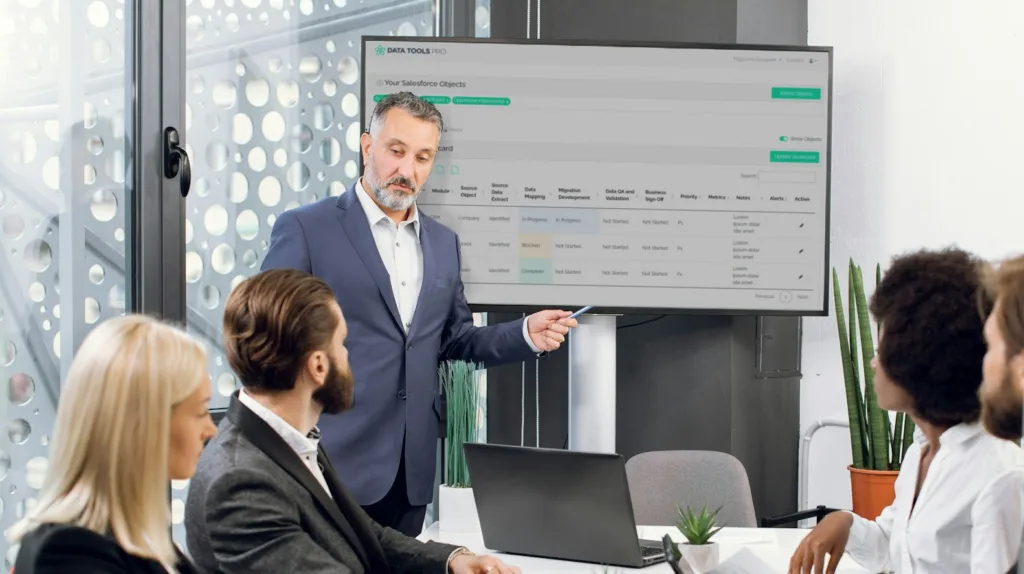

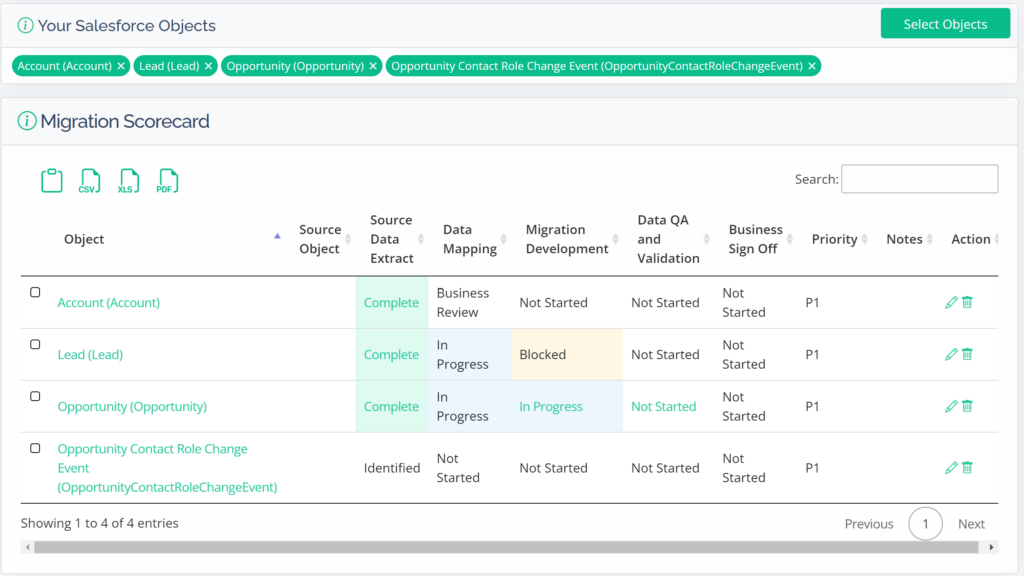

DataTools Pro simplifies the data mapping process through innovative features that enhance accuracy and efficiency. The tool automates many aspects of data mapping, reducing the workload for teams. With real-time updates, teams can ensure that their data remains consistent and accurate across systems.

One standout feature is automated data mapping, which links data fields without manual intervention. This automation minimizes human errors and speeds up the process, allowing teams to focus on more strategic tasks. Additionally, DataTools Pro provides intuitive interfaces that make it easy for users to visualize and manage data relationships.

Automated tools, like DataTools Pro, offer several advantages over manual data mapping methods. First, they save time by quickly processing large volumes of data. Teams no longer need to spend endless hours matching fields manually. Automation also ensures more precise mapping, reducing the risk of mismatches that could lead to inaccuracies.

Furthermore, these tools enhance collaboration. By providing a standardized approach, everyone involved can see and understand the data mappings without confusion. This transparency fosters better teamwork and ensures everyone stays on the same page regarding data integration goals.

In summary, DataTools Pro not only simplifies the mapping process but also provides a reliable foundation for data management. Its advanced features promote accuracy and efficiency, empowering organizations to handle data with confidence and achieve better results.

Practical Tips for Streamlining Data Mapping

To make data mapping efficient, follow these best practices, which help you avoid common issues.

1. Understand the Data: Before starting, ensure you know the data types and formats from both source and target systems. Understanding these details helps in setting precise mappings.

2. Consistent Naming Conventions: Use consistent names for fields across systems. This makes it easier to map similar fields without confusion.

3. Use Templates: If you handle repetitive tasks, create templates for similar mapping projects. This saves time and ensures uniformity.

4. Document Everything: Maintain clear documentation of mappings. This helps in future updates and troubleshooting.

5. Involve the Right People: Engage data experts and those familiar with the systems being integrated. They can foresee potential challenges and solve issues promptly.
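
Several of the practices above (knowing data types, reusing templates, and documenting mappings) can be combined in one small structure. The following is a sketch under stated assumptions: the `FieldMapping` class, the `CONTACT_TEMPLATE` list, the source column names, and the custom field `Signup_Date__c` are all hypothetical examples, not part of Salesforce or DataTools Pro.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class FieldMapping:
    """One documented mapping from a source field to a target field."""
    source: str
    target: str
    data_type: str
    note: str = ""


# A reusable template for contact imports (hypothetical field names).
CONTACT_TEMPLATE = [
    FieldMapping("email_address", "Email", "string", "lowercase before load"),
    FieldMapping("signup_date", "Signup_Date__c", "date", "hypothetical custom field"),
]


def document(mappings):
    """Render the mappings as plain-text documentation, one line per field."""
    lines = ["source -> target (type): note"]
    for m in mappings:
        lines.append(f"{m.source} -> {m.target} ({m.data_type}): {m.note}")
    return "\n".join(lines)


doc_text = document(CONTACT_TEMPLATE)
print(doc_text)
```

Keeping the template and its documentation in the same structure means the written record cannot drift away from the mapping that is actually applied.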

Avoid common pitfalls during data mapping. One mistake is skipping data validation. Always check to ensure data moves correctly from one point to another. Ignoring updates can also be a problem. Regularly review and adjust mappings as systems evolve. Failing to test thoroughly can lead to issues, so always conduct tests before finalizing mappings.
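
As an illustration of the validation step, the sketch below compares source rows with the rows that arrived in the target and reports any differences. It is a simplified example in plain Python: the function `validate_load`, the sample records, and the source column names are hypothetical, and a real check would also need to account for type conversions and transformations applied during the load.

```python
def validate_load(source_rows, target_rows, field_map, key_source, key_target):
    """Return a list of problems found; an empty list means the check passed."""
    problems = []
    if len(source_rows) != len(target_rows):
        problems.append(
            f"row count mismatch: {len(source_rows)} source vs {len(target_rows)} target"
        )
    # Index target rows by their key field for quick lookup.
    targets_by_key = {row[key_target]: row for row in target_rows}
    for src in source_rows:
        key = src[key_source]
        tgt = targets_by_key.get(key)
        if tgt is None:
            problems.append(f"missing in target: {key}")
            continue
        # Compare every mapped field value between source and target.
        for source_field, target_field in field_map.items():
            if src.get(source_field) != tgt.get(target_field):
                problems.append(f"{key}: {source_field} != {target_field}")
    return problems


field_map = {"email_address": "Email", "last_name": "LastName"}
source = [
    {"email_address": "ada@example.com", "last_name": "Lovelace"},
    {"email_address": "alan@example.com", "last_name": "Turing"},
]
target = [{"Email": "ada@example.com", "LastName": "Lovelace"}]

problems = validate_load(source, target, field_map, "email_address", "Email")
for problem in problems:
    print(problem)
```

Running a check like this after every test load, and again whenever a mapping changes, covers the validation, review, and testing steps described above.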

Maximizing Business Value through Effective Data Mapping

Effective data mapping plays a pivotal role in enhancing decision-making. When data is accurately mapped, it provides reliable insights that guide strategic actions. Teams can pinpoint exactly what’s happening in their operations, leading to informed business decisions.

Accurate mapping also boosts operational efficiency. By ensuring data flows correctly across systems, businesses save time spent on error correction and rework. This efficiency translates into cost savings and improved service delivery. With everything running smoothly, teams can focus on achieving goals without data-related disruptions.

Data mapping is essential for successful data migrations and transformations. When transferring data between systems, precise mapping ensures nothing gets lost in translation. This is vital during system upgrades or when integrating new technologies. Proper mapping ensures data remains consistent and usable in its new environment.

Conclusion

Salesforce data mapping is a powerful component for managing and optimizing business data flows. By understanding its challenges and leveraging effective tools like DataTools Pro, organizations can enhance data accuracy and reliability. Following best practices ensures that data mapping not only streamlines operations but also maximizes the strategic value of data. Accurate and efficient data management supports robust decision-making, paving the way for business growth and innovation.

To unlock the full potential of seamless data integration, try DataTools Pro today. Our advanced tools simplify your data mapping processes, ensuring efficiency and accuracy across all your systems. Start your journey toward better Salesforce data analytics with DataTools Pro and experience the difference it makes for your business.