Our Azure DataFactory template as a free resource to remove complexity to create Salesforce Data pipelines to Snowflake. You extract 1 or 50 tables from your Salesforce organization with ease. Our DataTools Pro template was created as 3 modular building blocks that can be re-used for other Snowflake / Salesforce data integrations within ADF.

Download Free ADF Template

Install and use the ADF template

Need More Salesforce data pipelines?

We have additional templates for databases and enterprise data sources. Feel free to contact us to learn more about premium templates and our DataTools Doctors services.

Template FAQ: Salesforce data pipelines to Snowflake

What use case is this template designed for (complete refresh or incremental?

The current version of this template was designed for organizations that require frequency of data refresh in Snowflake to be daily or periodic. This template will truncate and replace your Salesforce data which ensures from the point of data extraction data always matches Salesforce. The template does not support incremental data refresh as data changes. This template can be modified to support incremental loading of data. If this is a requirement, feel free to contact us for more information

What is required to run this ADF Template?

Detailed configuration instructions are provided below, but you need access to your Salesforce org, Snowflake, Azure Blob Storage, and

Is this used for 1 time setup or daily data operations?

You can use this template for accelerated setup, change management, and daily scheduled data movement from Salesforce to Snowflake. We have recently updated our template to cover the most common use cases. Feel free to share your requirements and we are happy to consider adding more enhancemnets!

How frequent does the data refresh?

This template was designed for daily scheduled or period data movement (2-3 times) from Salesforce to Snowflake. As the volume of data and frequency for refresh increases, the need for incremental refresh may be required.

Salesforce

You will need Salesforce username, password and user token generated via connected app configuration. This will require Salesforce elevated permissions. We have created a step by step tutorial to streamline the process and ensure it is setup properly.

DataTools Pro Step by Step Tutorial

More info from Azure DataFactory

Snowflake

You will need a Snowflake linked service

When you create your linked service, you will want to ensure the ADF system user has a role that has been granted the following following privileges on the database you are using for this project. This following code will execte when you provide the database name and role name.

GRANT CREATE EXTERNAL TABLE ON FUTURE SCHEMAS IN DATABASE <db_name> TO ROLE <role_name>;

GRANT CREATE SCHEMA ON DATABASE <db_name> TO ROLE <role_name>;

GRANT CREATE STAGE ON FUTURE SCHEMAS IN DATABASE <db_name> TO ROLE <role_name>;

GRANT CREATE TABLE ON FUTURE SCHEMAS IN DATABASE <db_name> TO ROLE <role_name>;

GRANT CREATE TEMPORARY TABLE ON FUTURE SCHEMAS IN DATABASE <db_name> TO ROLE <role_name>;

GRANT CREATE VIEW ON FUTURE SCHEMAS IN DATABASE <db_name> TO ROLE <role_name>;

GRANT INSERT ON FUTURE TABLES IN DATABASE <db_name> TO ROLE <role_name>;

GRANT READ ON FUTURE STAGES IN DATABASE <db_name> TO ROLE <role_name>;

GRANT SELECT ON FUTURE TABLES IN DATABASE <db_name> TO ROLE <role_name>;

GRANT SELECT ON FUTURE VIEWS IN DATABASE <db_name> TO ROLE <role_name>;

GRANT TRUNCATE ON FUTURE TABLES IN DATABASE <db_name> TO ROLE <role_name>;

GRANT UPDATE ON FUTURE TABLES IN DATABASE <db_name> TO ROLE <role_name>;

GRANT USAGE ON FUTURE FUNCTIONS IN DATABASE <db_name> TO ROLE <role_name>;

GRANT USAGE ON FUTURE PROCEDURES IN DATABASE <db_name> TO ROLE <role_name>;

GRANT USAGE ON FUTURE SCHEMAS IN DATABASE <db_name> TO ROLE <role_name>;

GRANT USAGE ON FUTURE STAGES IN DATABASE <db_name> TO ROLE <role_name>;

GRANT WRITE ON FUTURE STAGES IN DATABASE <db_name> TO ROLE <role_name>;Blob Storage with Shared access signature (SAS) based Authentication

We recommend setting up a blob storage service specifically for your data lake and limit access exclusively to the Azure process. The role of Azure blob storage is temporary data staging while transmitting data from Salesforce to Snowflake, and logging. Data is NOT persisted in Azure blob storage.

You will need a need a blob storage container linked service configured with Shared access signature. The following articles can be provided to your Azure administrator to ensure the right configuration settings and keys are setup in Azure DataFactory.

1 Azure Website: Shared access signature authentication configuration

Template Considerations for Security and IT Permissions

To use the Azure DataFactory template, you will need access to 3 linked services. We cover setup of these services at DataTools to make it simple and concise.

Salesforce Connected App / Linked Service

ADF Template Install Directions

- Download the Zip Template file labeled “Data Tools Pro 1 Click Data Lake”

- Open Azure Data Factory

- Click “+” Button Next to Pipelines

- Click Add from Template

- Select the downloaded zip file from your desktop

- Follow the Template Prompts to select your Salesforce, Snowflake, and Blob storage linked services

- Select the canvas and click parameters

- Fill in the parameters for DatabaseName, Schemaname, and optional prompts for creating a new schema or using an existing schema.

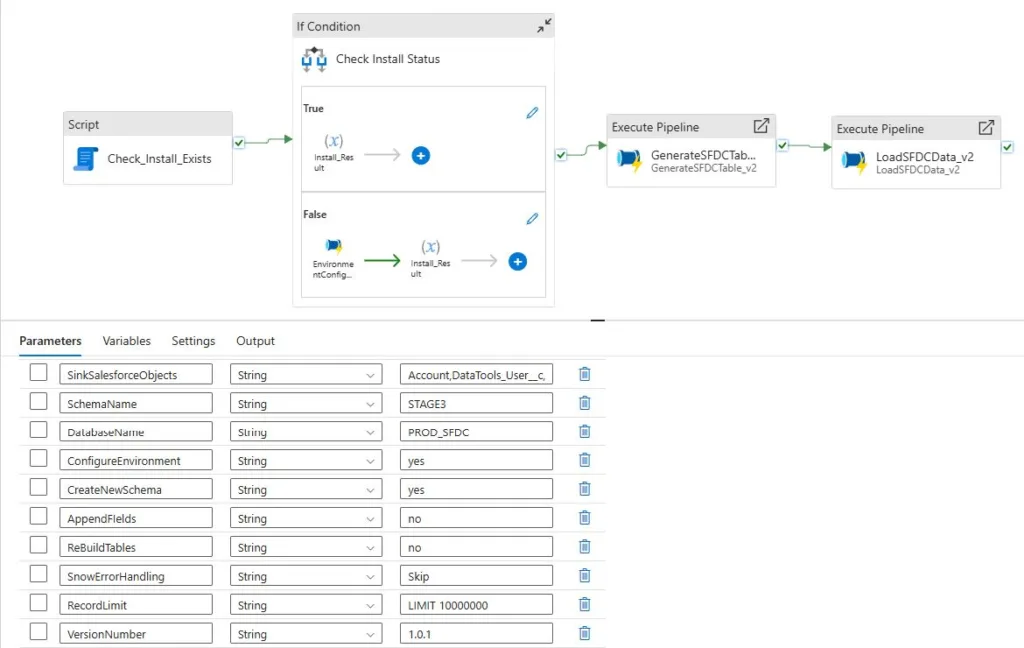

Salesforce to Snowflake ADF Template Usage

- When you complete the template import, click on the data pipeline labeled: “DataTools Pro SFDC to Snowflake”

- When you open the template, select the “Parameters” tab

- Within the ADF template you will configure the following ADF parameters:

SinkSalesforceObjects: Comma seperared lists of Salesforce objects.

This list is case sensitive and should match your Salesforce organization’s Object API Names. When Snowflake tables are created, they are converted to case insensitive (upper case).

DatabaseName: Your Snowflake Database where your schema and stage tables will be created and populated with data.

SchemaName: Snowflake schema name where you will stage and create your Salesforce data lake.

ConfigureEnvironment: Will run the configuration steps which completes the following:

Installs 2 stored procedures called “ADFMAPPINGFROMSFDCMETADATA” and “DDLFROMMETADATA”

Adds a log table called “LOGTABLE”

Adds a meta data table called “SFDC_METADATA_STAGE_TEMP” and “SFDC_METADATA_STAGE”

CreateNewSchema: This function will generate a brand new schema in your database to insert and create your data lake. This will require elevated permissions to create a schema on an existing databse in your Snowflake account.

| Accepted Value | |

|---|---|

| yes | Will create or replace your schema in your Snowflake DatabaseName using SchemaName. Requires schema creation permissions. |

| no | Will use the the SchemaName to perform all pipeline functions |

AppendFields: This function will append new fields to your meta data if they are added to your source table.

| Accepted Value | |

|---|---|

| yes | Will append new fields in your source table |

| no | Will ignore new fields in your source table |

SnowErrorHandling

When copying data to Snowfake, we have enabled error handling.

| Accepted Value | |

|---|---|

| CONTINUE | Will ignore errors and insert only valid records in your data set. |

| SKIP_FILE | Will the entire file resulting in 0 records inserted. |

| ABORT_STATEMENT | Will fail the pipeline |

RecordLimit: Record limit was designed to help with testing and debugging to reduce the size of your object queries.

Accepted string value: “LIMIT 1000000”

VersionNumber

VersionNumber is set for development purposes. We recommend not updating this parameter to conflict with backwards compatibility.