If you plan to load data from an AWS S3 stage into Snowflake, it requires configuration steps for the following services. The official, up to Snowflake S3 documentation is short and vague if you want a secure stage. We cover both products so you your AWS and Snowflake admins can have a complete, secure configuration.

- S3 – Create a bucket specifically for Snowflake

- IAM Role – Create an IAM Policy. You will need to provide your AWS admin with

- Snowflake – Obtain your STORAGE_AWS_ROLE_ARN and S3 Bucket ARN from your Salesforce Admin.

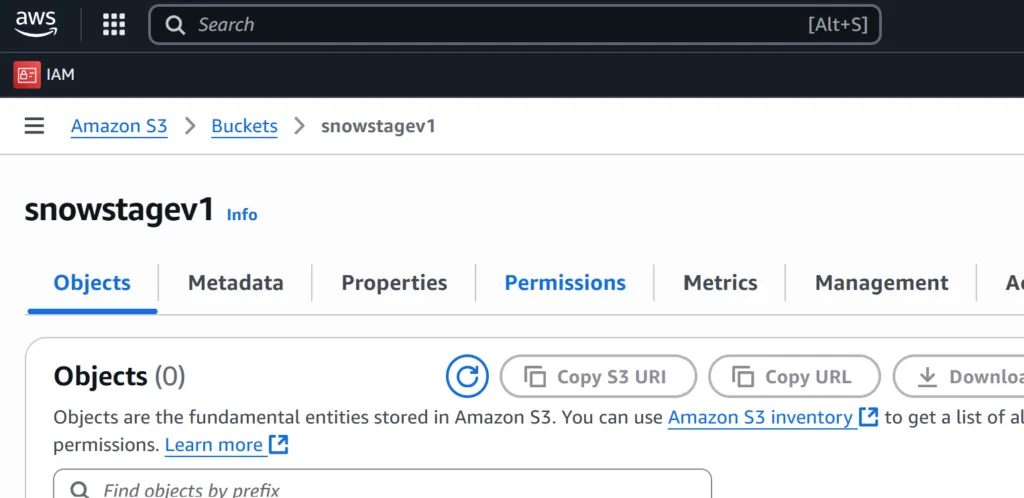

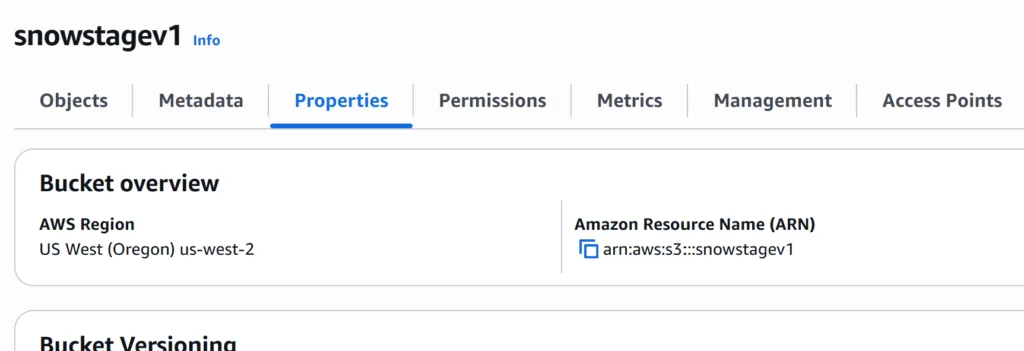

AWS- Create an S3 Bucket that will be used as a Stage

Click “Create bucket”.

Give your bucket a name (e.g., my-snowflake-data-bucket) and choose a region (e.g., us-west-2).

Leave all other settings as default unless you have specific needs (e.g., encryption, public access block).

Click Create bucket.

Copy the bucket ARN. In this example we named our stage “snowstagev1”

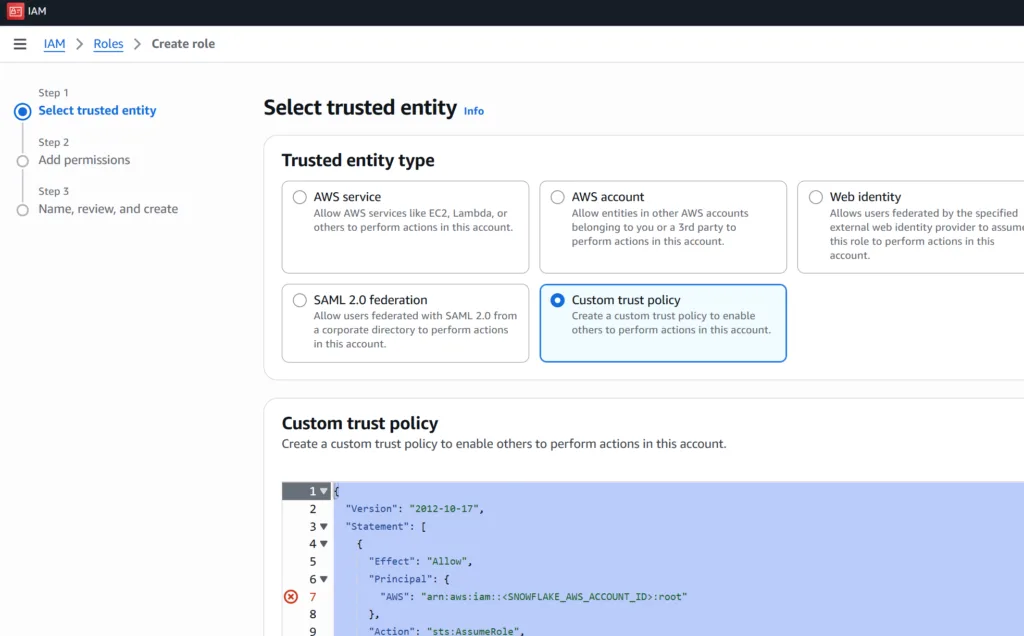

AWS – Create an IAM Role

This step will require assistance from your AWS Administrator.

Background: We are creating an AWS role that restricts Snowflake to use your S3 bucket as an external stage. Additional documentation from Snowflake:

- Go to the IAM Console → Roles→ Click “Create role”.

- Select Custom trust policy

Enter the following policy template. At this point you just need to fill in your AWS Account ARN.

NOTE: “PLACEHOLDER_EXTERNAL_ID” will come from Snowflake and you will complete that in a later step.

{

"Version": "2012-10-17",

"Statement": [

{

"Effect": "Allow",

"Principal": {

"AWS": "arn:aws:iam::<<PLACEHOLDER_STORAGE_AWS_IAM_USER_ARN>>:root"

},

"Action": "sts:AssumeRole",

"Condition": {

"StringEquals": {

"sts:ExternalId": "<<PLACEHOLDER_STORAGE_AWS_EXTERNAL_ID>>"

}

}

}

]

}For step 2, you will skip adding permissions. You will complete this step later with a custom permission to your S3 bucket.

Snowflake – Create your Integration and Stage

Open up a Snowflake workbook or worksheet.

- Name your Storage Integration

- Add your AWS account ID

- Add your bucket name created in AWS

//CREATE STORAGE INTEGRATION TO TO ACCESS YOUR STAGE

CREATE STORAGE INTEGRATION <<YOUR_STORAGE_INTEGRATION_NAME>>

TYPE = EXTERNAL_STAGE

STORAGE_PROVIDER = 'S3'

ENABLED = TRUE

STORAGE_AWS_ROLE_ARN = 'arn:aws:iam::<<YOUR_ACCOUNT>>:role/<<YOUR_ROLE_NAME>>'

STORAGE_ALLOWED_LOCATIONS = ('s3://<<YOUR_BUCKET_NAME>>/');Acquire your stage details from from Snowflake to grant access to your AWS Account

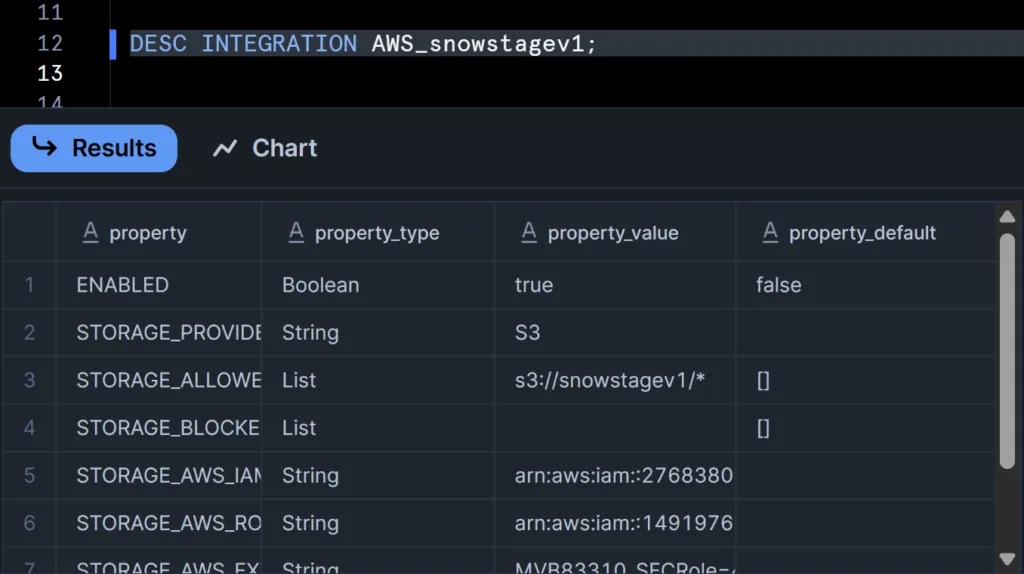

//VIEW STAGE DETAILS

DESC INTEGRATION <<YOUR_STORAGE_INTEGRATION_NAME>>;

Copy the following fields and provide them to your AWS AdminSTORAGE_AWS_IAM_USER_ARN → extract Snowflake’s AWS account ID only. It should be the numeric value.

STORAGE_AWS_EXTERNAL_ID

AWS

Copy the STORAGE_AWS_IAM_USER_ARN and STORAGE_AWS_EXTERNAL_ID

AWS Complete your Role Permissions

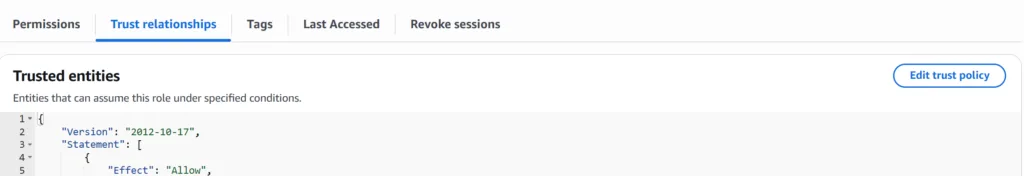

Open your custom role, and edit the trust policy

Copy the IDs provided by your admin into the respective placeholders

STORAGE_AWS_IAM_USER_ARN STORAGE_AWS_EXTERNAL_ID

{

"Version": "2012-10-17",

"Statement": [

{

"Effect": "Allow",

"Principal": {

"AWS": "arn:aws:iam::<<PLACEHOLDER_STORAGE_AWS_IAM_USER_ARN>>:root"

},

"Action": "sts:AssumeRole",

"Condition": {

"StringEquals": {

"sts:ExternalId": "<<PLACEHOLDER_STORAGE_AWS_EXTERNAL_ID>>"

}

}

}

]

}AWS Set Permissions for your Role to your S3 Bucket

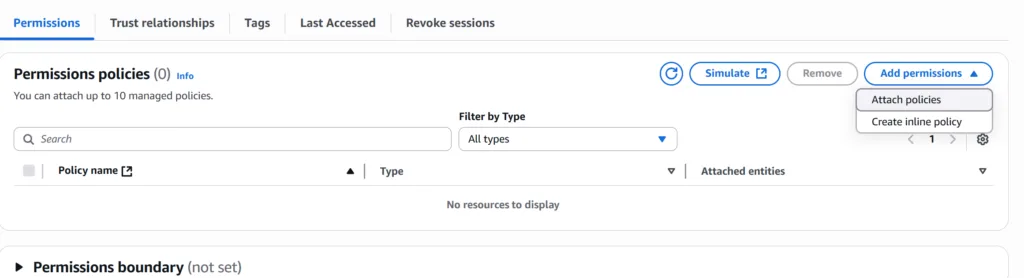

For your role, click the Permissions tab

Click “Add Permissions” and then “Create inline policy”

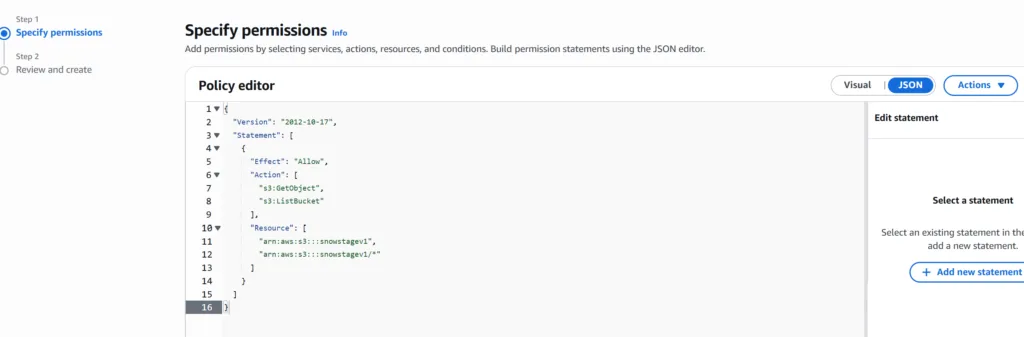

Copy the following policy which is designed to grant read-only access from Snowflake to load data.

{

"Version": "2012-10-17",

"Statement": [

{

"Effect": "Allow",

"Action": [

"s3:GetObject",

"s3:ListBucket"

],

"Resource": [

"arn:aws:s3:::snowstagev1",

"arn:aws:s3:::snowstagev1/"

]

}

]

}

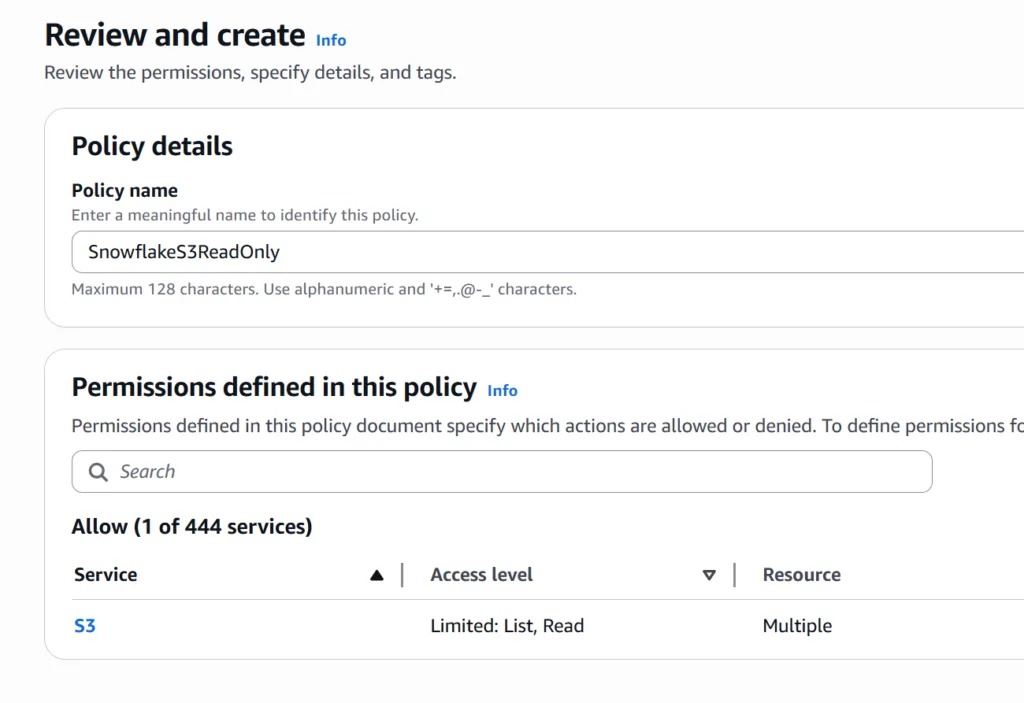

Name your trust policy something like Snowflake S3 Read Only.

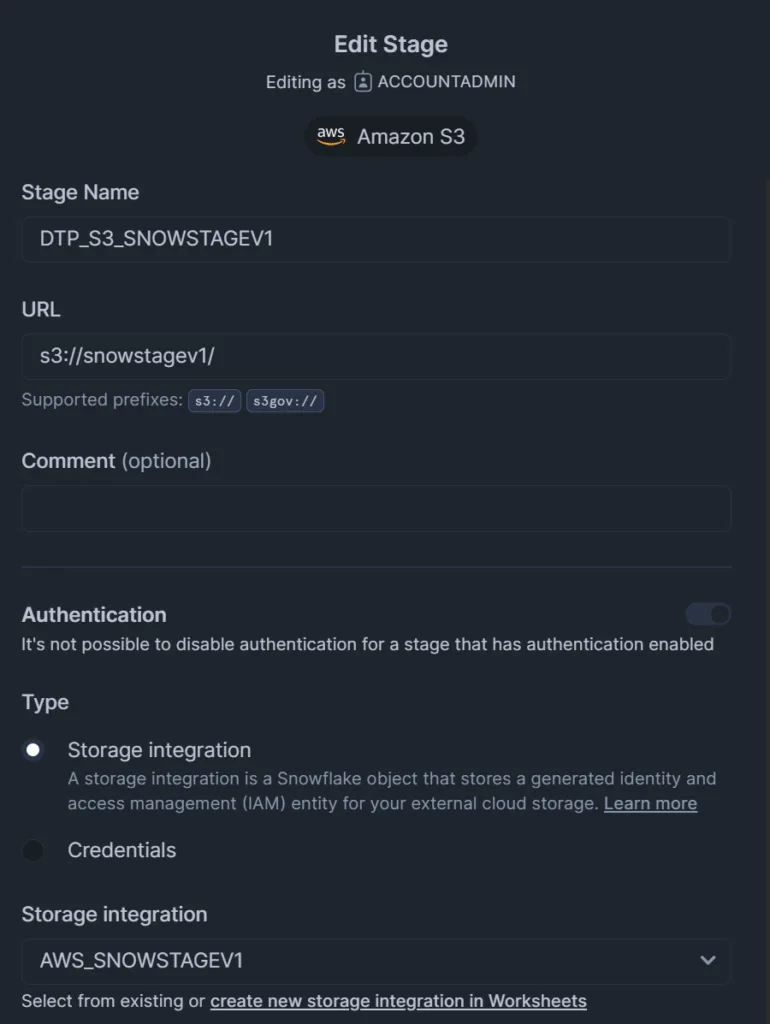

Snowflake Create your Stage with Storage Integration

You can create your stage in Snowsight. Simply go to the database

Click Create > Stage > AWS S3

Add a Stage Name that is descriptive for your Snowflake developers

Enter the URL of your S3 bucket. You can include subfolders if you have multiple paths that you want to expose for different business purposes.

Select your Storage Integration that you configured.

Here is the SQL equivalent:

//CREATE STAGE WITH STORAGE INTEGRATION

CREATE STAGE <<STAGE_NAME>>

STORAGE_INTEGRATION = <<YOUR_STORAGE_INTEGRATION_NAME>>

URL = 's3://<<YOUR_BUCKET_NAME>>/';

NOTE: Make sure you include the trailing “/” in your S3 bucket URL.